5 Best Data Annotation Companies in 2025 [Services & Pricing Comparison]

This guide reviews the top data annotation companies in 2025—Lightly AI, Surge AI, iMerit, AyaData, and Cogito—comparing services, strengths, and pricing to help teams choose secure, scalable, and cost-effective labeling solutions.

Here is what you need to know about the top data annotation companies:

- Who are the top data annotation companies in 2025?

Leading data annotation providers include Lightly AI, Surge AI, iMerit, AyaData, and Cogito. Each excels in different areas, such as scale, automation, domain specialization, platform flexibility, and AI-assisted workflows.

- How to choose a reliable data annotation service?

Look for domain expertise aligned with your project, such as computer vision or natural language processing. Make sure the provider follows recognized data security and privacy standards such as ISO 27001, SOC 2, or HIPAA. Evaluate its ability to scale for large projects, and check whether it offers automation features that can accelerate labeling while reducing costs. Most importantly, confirm that its pricing model is transparent and fits your budget and project requirements.

- How much do annotation services cost?

Basic computer vision tasks are relatively inexpensive, typically costing $0.02 to $0.09 per object. In contrast, managed services are billed at $6 to $12 per hour, while complex Large Language Model (LLM) tasks can reach up to $100 per example. For large-scale projects, volume discounts usually kick in once you exceed 100,000 labels.

- Do these companies offer free trials or pilots?

Yes, many data annotation companies offer pilot projects or free trials. For data annotation services, Lightly offers clients the option to request free samples or pilot projects. This allows organizations to evaluate labeling quality, workflows, and domain expertise before committing to a full engagement.

Data quality sets the ceiling for how well an AI model can perform. Millions of images, videos, texts, and audio files must be labeled correctly to turn raw information into something machines can learn from. This process is called data annotation.

High-quality annotation separates reliable models from failed ones. Even the most advanced AI will produce flawed results without accurate labels, making the choice of the annotation team critical.

In this article, we’ll look at:

- Best data annotation companies in 2025

- How to choose the right data annotation company?

- Why is quality data annotation challenging?

- Lightly AI advanced data annotation services for modern AI development

With more data to label and less time to do it, teams need faster and smarter data annotation.

Best Data Annotation Companies in 2025

As AI adoption accelerates across industries, the role of data annotation companies has evolved from service providers to strategic partners.

Here, we profile the leaders shaping the future of data annotation.

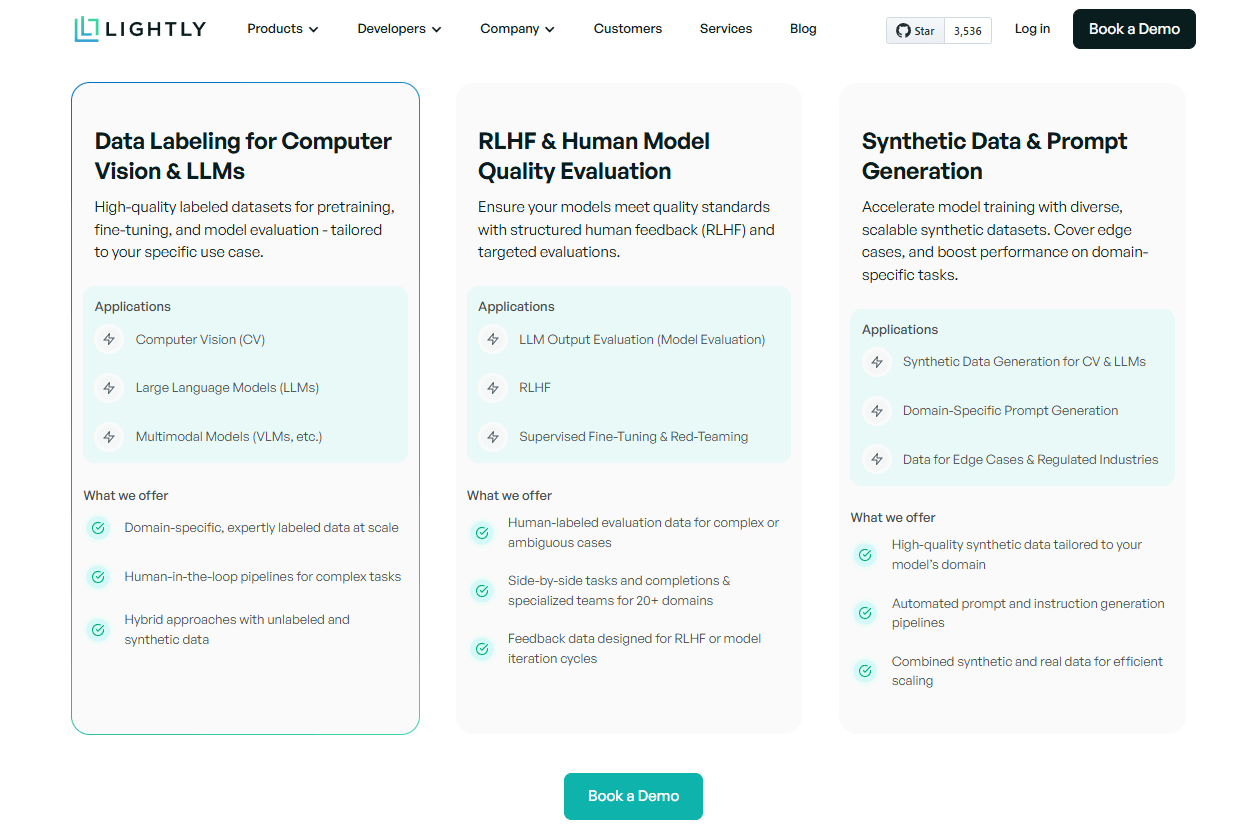

Lightly AI

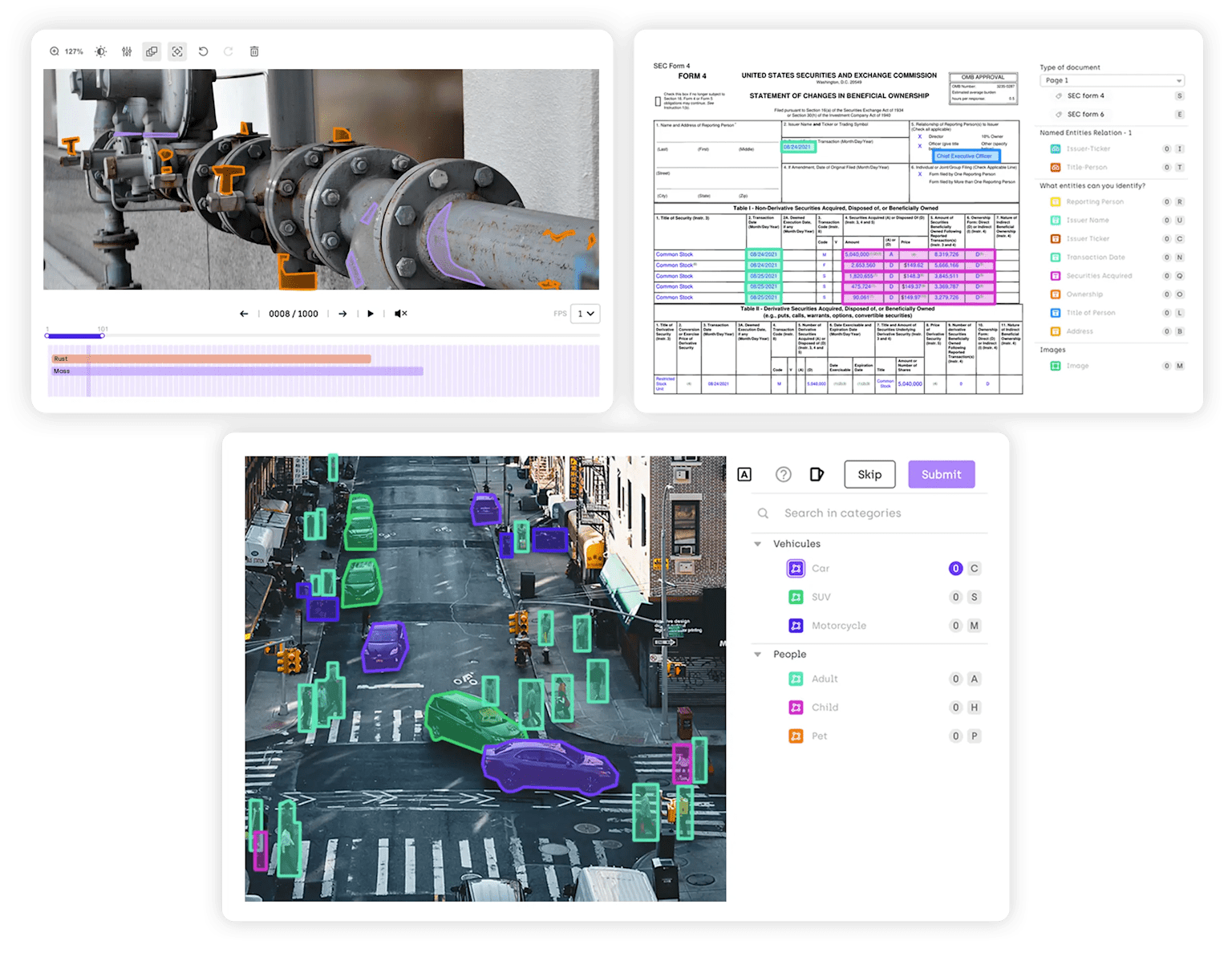

Lightly AI provides high-quality training data services for computer vision, LLMs, and multimodal models. At its core, Lightly offers data labeling and annotation services, which combine domain-specific expertise with human-in-the-loop workflows for complex tasks.

What sets Lightly apart is its broader training data suite, which includes synthetic data generation, RLHF (reinforcement learning from human feedback), and structured model evaluation. This helps teams cut labeling costs, improve dataset quality, and accelerate deployment.

Key Features

- Data Annotation for CV and LLMs: Lightly delivers high-quality annotation pipelines for images, video, and text. Human-in-the-loop review ensures accuracy in complex cases, while hybrid workflows integrate real, unlabeled, and synthetic data.

- RLHF and Human-Driven Model Evaluation: Lightly provides reinforcement learning from human feedback (RLHF) and structured evaluation services. Expert annotators generate evaluation datasets for edge cases and ambiguous inputs, while side-by-side completions support red-teaming and systematic quality scoring across 20+ domains.

- Synthetic Data & Prompt Generation: To complement annotation, Lightly creates scalable synthetic datasets that address rare scenarios or regulated industries. Automated prompt and instruction generation accelerates LLM fine-tuning and red-teaming. Blending synthetic with real labeled data enables efficient scaling without sacrificing domain coverage.

- Prediction-Aware Pretagging & Smart Selection: Lightly boosts annotation efficiency with prediction-aware pre-tagging. Using a pre-trained Faster R-CNN with a ResNet-50 backbone, raw data is automatically tagged (e.g., cars, trucks, pedestrians). These predictions inform data selection strategies that balance class distributions and enhance dataset diversity. This ensures annotation resources target the most valuable samples.
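The idea of using pre-tag predictions to balance class distributions can be illustrated with a small sketch. This is not Lightly's actual selection algorithm or API; the function name `select_balanced`, the input layout, and the round-robin strategy are assumptions chosen only to show the principle: group samples by predicted class, then pick from each class in turn so that frequent classes (such as cars) do not crowd out rare ones.

```python
from collections import defaultdict


def select_balanced(predictions, budget):
    """Pick up to `budget` sample ids, cycling over predicted classes.

    `predictions` maps sample_id -> (predicted_class, confidence).
    This layout is a hypothetical one used for illustration only.
    """
    by_class = defaultdict(list)
    for sample_id, (label, confidence) in predictions.items():
        by_class[label].append((confidence, sample_id))

    # Within each class, take the least confident predictions first,
    # since those are the ones a human label is most likely to improve.
    for items in by_class.values():
        items.sort()

    selected = []
    # Round-robin over classes until the budget or the data runs out.
    while len(selected) < budget and any(by_class.values()):
        for label in sorted(by_class):
            if by_class[label] and len(selected) < budget:
                _, sample_id = by_class[label].pop(0)
                selected.append(sample_id)
    return selected


if __name__ == "__main__":
    pretags = {
        "img_001": ("car", 0.98),
        "img_002": ("car", 0.95),
        "img_003": ("car", 0.60),
        "img_004": ("truck", 0.80),
        "img_005": ("pedestrian", 0.55),
        "img_006": ("car", 0.91),
    }
    print(select_balanced(pretags, budget=4))
```

With a budget of four, the sketch picks one sample each from the car, pedestrian, and truck groups before returning to the car group for a second sample, even though cars make up most of the raw data.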

Pricing Structure

Lightly provides two main options designed for different user needs, along with a flexible pay-per-use model.

- Community (Free): Supports up to 25k images/frames (max 1024px), with active learning strategies, analytics, API/tooling integration, and cloud storage. Available at no cost for students, academics, non-profits, and OSS contributors, with support via community Discord.

- Custom / Enterprise: Extends the Team plan with unlimited datasets and advanced data types like 4K images, video, sequences, crops, LiDAR, RADAR, and medical formats. Offers on-premise or private cloud installs (Kubernetes), edge/cloud options, model and drift monitoring, security (SLA, 2FA/SSO), training, and dedicated Slack support.

Pay-per-use pricing is also available at $0.01 per input image for large ingestion workloads.

Pro Tip: Looking for the best tool to annotate your data? Check out 12 Best Data Annotation Tools for Computer Vision (Free & Paid).

Projects

Lightly has worked with Google on several LLM evaluation projects that enhanced LLM performance by 2x. Other projects include working with Protex AI to boost data annotation quality by 55% and labeling domain-specific data for Wingtra to help them reduce annotation overhead by 4x.

Industrial Video Defect Detection

Lightly helped Lythium reduce curation time by 50% and improved detection accuracy by 36% by using smart frame selection from their video data. This enabled Lythium to focus labeling efforts on the most informative frames.

Agriculture Robotics

Aigen reduced its dataset size by 80–90% and doubled deployment efficiency by eliminating large amounts of redundant data that would have needed labeling. Lightly’s system identified edge-case images across various soils, crops, and lighting conditions, exposing models to rare, critical scenarios.

Consequently, Aigen deployed models that trained faster and generalized better in diverse farming environments.

Surgical Video Frame Processing

SDSC processed 2.3 million surgical video frames in a single month, which notably cut down the time needed to prepare training data. Lightly’s selection strategies filtered for the most informative frames. This helped reduce redundant labeling and enabled a 10× faster annotation pipeline.

The curated dataset went on to power a YOLOv8 instrument detection model, delivering higher efficiency and accuracy.

Best for

Lightly is best suited for teams working on computer vision projects that involve large, complex image or video datasets. It’s ideal for organizations that want to cut down redundant data, focus on the most informative samples, and accelerate model development without ballooning annotation costs.

Surge AI

Surge AI is an RLHF and annotation infrastructure platform delivering high-fidelity human feedback and expert labeling for LLMs, content moderation, and AI safety pipelines.

It combines domain-skilled annotators, custom task schemas, and integrated tooling (API/SDK, dashboards) to support precise alignment, trustworthiness, and quality in language-data annotation.

Key Features

- RLHF-Native Workflows: Surge offers built-in task types for ranking, preference judgments, and instruction-following feedback. These workflows produce the structured signals required to train reward models and fine-tune LLMs.

- Expert Annotator Matching: Annotators are vetted for domain expertise in areas like law, medicine, and coding. Gold-standard checks and inter-annotator agreement ensure consistently high-quality labels.

- Custom Alignment & Safety Tasks: The platform supports bias detection, toxicity scoring, and compliance reviews using custom rubrics. Teams can design task guidelines tailored to their alignment and safety requirements.

- API & SDK Integration: Surge provides developer interfaces to push model outputs, launch annotation jobs, and pull structured results. This allows seamless integration into LLM training and evaluation pipelines.

- Quality Monitoring Dashboards: Real-time dashboards track throughput, annotator performance, and consensus metrics. Audit trails and re-assignment tools enforce accountability and reproducibility.
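Inter-annotator agreement, mentioned in the list above, is commonly measured with Cohen's kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The sketch below is a generic, minimal implementation for two annotators; it does not reflect Surge AI's internal tooling, and the function name and sample labels are illustrative assumptions.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item set")

    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    annotator_1 = ["toxic", "safe", "safe", "toxic", "safe", "safe"]
    annotator_2 = ["toxic", "safe", "toxic", "toxic", "safe", "safe"]
    print(round(cohen_kappa(annotator_1, annotator_2), 3))
```

A kappa near 1 indicates strong agreement, while a value near 0 means the annotators agree about as often as chance would predict, which usually signals unclear guidelines.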

Pricing Structure

Surge AI does not publish explicit pricing tiers or rate cards. Costs are provided only through custom quotes based on task complexity, domain expertise, and project scale.

Projects

RLHF for Claude

Anthropic partnered with Surge AI to generate the large volumes of high-quality human feedback needed to train and evaluate its Claude language models.

Surge provided expert annotators across domains (law, STEM, code, safety) and custom workflows for ranking, preference judgments, and safety red-teaming. This allowed Anthropic to scale reinforcement learning from human feedback efficiently while enforcing high quality and consistency in evaluation.

Building the GSM8K Math Dataset for OpenAI

Surge AI collaborated with OpenAI to construct the GSM8K dataset, a benchmark of 8,500 grade-school math word problems with step-by-step solutions. Annotators designed and validated problems, creating a high-quality dataset used to measure and train LLMs' mathematical reasoning.

Best For

Surge AI is ideal when your annotation needs are centered on language, dialogue, safety, preference alignment, or evaluation, especially for LLMs or generative systems.

It is less suited for computer vision annotation tasks, such as bounding boxes or segmentation, unless they are part of a multimodal or risk/safety evaluation workflow.

iMerit

iMerit is a data solutions platform focused on high-accuracy annotation for complex and regulated domains, including medical imaging, autonomous systems, geospatial intelligence, and generative AI.

It delivers large-scale labeled datasets through a secure in-house workforce. This approach emphasizes quality control, compliance, and the careful handling of edge cases where model reliability is most critical.

Key Features

- Workflow Automation: The Ango Hub platform supports custom workflows, annotator and reviewer assignment, automatic pre-annotation (e.g., OCR, object proposals), and real-time QA feedback. These controls ensure consistent output, reducing rework and improving precision for downstream model training.

- Broad Multimodal Support: iMerit handles a wide range of data types, including images, video, LiDAR, audio, text, PDFs, DICOM formats, multi-frame medical images, and sensor-fusion inputs. This allows teams working with complex or regulated domains to centralize annotation without switching vendors.

- Edge Case Identification: Through its EdgeCase initiative, iMerit identifies when models fail to detect signs, reflections, and ambiguous objects, annotates them accurately, and maintains a reuse repository.

- Quality Assurance: iMerit’s QA system reduces errors and rework by using benchmark tests, consensus checks, and review loops to keep labels accurate. It follows strict security and compliance standards, giving users reliable datasets they can trust.

Pricing Structure

iMerit primarily charges based on the actual volume of annotation work. Pricing scales with factors such as task complexity, annotation type (e.g., text, images, LiDAR, medical), and turnaround requirements.

Projects

Autonomous Vehicle Edge-Case Safety

iMerit partnered with a self-driving car company to annotate over 50,000 video segments within three months, covering rare events that involve pedestrians, headlight artifacts, and driver intent.

This targeted work improved model robustness in low-frequency scenarios that directly impact safety-critical performance.

E-commerce Content Enrichment & Moderation

iMerit delivered large-scale categorization, moderation, and deduplication of product listings for a large e-commerce platform. The structured output enhanced catalog integrity, reduced redundancy, and increased search and recommendation accuracy.

Best For

iMerit is best for enterprise-scale annotation in regulated, high-accuracy domains. It handles medical, AV, geospatial, and e-commerce data. This makes it suitable for organizations that need compliant annotation across multiple modalities, not just vision.

AyaData

AyaData is a full-stack AI data provider that transforms raw text, image, video, and 3D information into high-quality, precisely annotated data.

It combines human-in-the-loop and AI-assisted annotation across these data types to deliver labeled datasets for machine learning and generative AI.

Key Features

- Computer Vision Annotation Modes: AyaData provides multiple annotation types for images and video, including bounding boxes, polygons, segmentation, and keypoints. These options enable precise labeling of object detection, shape boundaries, and spatial relationships.

- Platform Matching & SaaS-Tool Partnerships: AyaData emphasizes “tool-agnostic” workflows, selecting annotation platforms and tools (e.g., V7, Kognic, etc.) best suited per project rather than using one fixed suite. This gives clients flexibility in format, speed, and feature set per task.

- Enterprise-Grade Security & Compliance: The company operates secure delivery centers with a full-time workforce and adheres to GDPR, HIPAA, SOC 2, and ISO 9001 standards. This ensures that sensitive data, particularly in healthcare and finance, is processed under strict security and regulatory requirements.

Pricing Structure

According to its website, AyaData offers transparent pricing with no hidden fees. However, it does not publish fixed rates for specific annotation types or project tiers.

Projects

Organising Fashion for the AI Era

TRUSS, a fashion resale/retail AI company, partnered with AyaData to scale image annotation and metadata enrichment for style analytics.

AyaData handled subjective style labels (e.g., “Grunge,” “Football Hooligan”) with culturally aware taxonomies and custom workflows.

Results included over 92% annotation accuracy on style attributes, a threefold increase in image throughput, and a reduction in internal QA burden.

AI Powers Smarter Forest Growth

Oko Environmental used AyaData’s drone data and AI pipeline to monitor forest germination and environmental risk in reforestation projects.

AyaData captured drone imagery, built custom AI models, and delivered insights via interactive dashboards. The project raised germination rates from ~72% to ~94% and streamlined reporting for environmental interventions.

Best For

AyaData is best for annotation services across computer vision, NLP, and 3D sensor data. It is particularly suited for AI projects that need flexible, tool-agnostic workflows and compliant, high-quality labeled datasets.

This makes it a strong choice for fields such as autonomous driving, medical imaging, geospatial mapping, and e-commerce.

Cogito Tech

Cogito Tech provides data annotation services for ML, particularly in emotional intelligence and NLP. The company is also known for image annotation and for delivering high-quality training data that supports large-scale training and evaluation pipelines.

Key Features

- Multi-Modality & Annotation Techniques: Supports a wide range of data types, including images, video, audio, text, documents/PDFs, and medical formats such as multi-frame DICOM. Provides advanced labeling methods like bounding boxes, polygons, semantic segmentation, keypoint annotation, LiDAR, and 3D sensor fusion.

- Scalability & Expert Workforce: Operates with a global team of 5,500+ experienced annotators across multiple secure centers. Offers project-specific workflow design, dedicated project managers, and flexible capacity to handle large-scale annotation needs.

- Platform Integrations & AI-Assisted Workflows: Cogito Tech works with multiple annotation and labeling platforms (e.g., V7, Labelbox, etc.). Uses model-assisted labeling and collaborative tools to speed up annotation, while maintaining human review and consistency.

Pricing Structure

Pricing details for Cogito are private, with costs based on data volume and flat-rate options available for larger accounts.

Projects

Turning Images into Insight

Cogito helped a client turn an underperforming spinal magnetic resonance imaging (MRI) model into a solution ready for Food and Drug Administration (FDA) and European Medicines Agency (EMA) approval.

The model had been trained on inconsistent 2D vertebra masks and struggled to deliver reliable results. Cogito deployed human annotators under the guidance of spine imaging specialists. They created precise 3D segmentation masks that worked consistently across different MRI scanners and centers.

As a result, segmentation performance rose from 68% to 91%, with much stronger generalization across datasets from multiple MRI centers.

Teaching AI to Smell

A global fragrance company needed consistent labels for scent intensity, pleasantness, and family classification, but olfactory data is subjective and hard to reproduce. Cogito created a structured framework using intensity scales, 11 fragrance families, expert sniffers, and consensus reviews in controlled labs.

The team delivered over 200,000 hours of annotations with 100% verified accuracy. This allowed the client to classify scents and predict user preferences reliably.

Best For

Cogito Tech is an excellent choice for budget-conscious healthcare projects and large AI organizations that demand customizable and scalable data annotation services.

Comparison Table

For a quick side-by-side breakdown, see the comparison table below.

How to Choose the Right Data Annotation Company?

High-quality data annotation is essential for training machine learning models and achieving accurate predictions.

The right provider should deliver precise annotations across various data types while supporting seamless integration, scalability, and security.

Multi-Modal Annotation Support

An ideal platform should support text, image, video, audio, and 3D data. For NLP, this includes named entity recognition and sentiment analysis. Vision tasks require accurate labeling with bounding boxes and instance segmentation.

In audio transcription and speech recognition, precise annotations determine the reliability of trained models. Coverage across formats like DICOM and LiDAR ensures adaptability for healthcare, autonomous driving, and e-commerce.

AI-Assisted Labeling

Look for platforms that offer AI-driven data workflows to boost efficiency. Features such as pre-labeling, active learning, and model-in-the-loop can reduce manual effort while raising accuracy.

Systems that flag low-confidence cases help control errors and speed up annotation cycles. This allows annotators to focus on complex edge cases and deliver precise annotations where they matter most.
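Flagging low-confidence cases can be as simple as splitting model pre-labels at a confidence threshold. The following is a minimal sketch under assumed conventions: each pre-label is a dictionary with a `confidence` field, and the 0.8 threshold is an arbitrary illustrative value that a real project would tune against its own QA results.

```python
def route_by_confidence(pre_labels, threshold=0.8):
    """Split pre-labels into auto-accepted items and items for human review.

    `pre_labels` is a list of dicts with at least a "confidence" key.
    The field names and threshold are assumptions for illustration.
    """
    auto_accept, needs_review = [], []
    for item in pre_labels:
        if item["confidence"] >= threshold:
            auto_accept.append(item)
        else:
            needs_review.append(item)

    # Put the least certain predictions at the front of the review queue.
    needs_review.sort(key=lambda item: item["confidence"])
    return auto_accept, needs_review


if __name__ == "__main__":
    pre_labels = [
        {"id": "a", "label": "car", "confidence": 0.97},
        {"id": "b", "label": "truck", "confidence": 0.42},
        {"id": "c", "label": "pedestrian", "confidence": 0.71},
    ]
    accepted, review = route_by_confidence(pre_labels)
    print([item["id"] for item in accepted])
    print([item["id"] for item in review])
```

In practice, auto-accepted items are usually still spot-checked on a sample basis, so the threshold trades review effort against the risk of silent label errors.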

Scalability and Quality Assurance

Large projects often require millions of labeled samples. The platform should scale both infrastructure and workforce to handle these volumes without delays.

High-quality data depends on structured QA processes such as consensus scoring, staged reviews, and gold-standard benchmarks. Providers with demonstrated capacity and rigorous QA frameworks can deliver consistent, accurate labeling even under heavy workloads.
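Consensus scoring and gold-standard benchmarks can both be expressed in a few lines. The sketch below assumes a simple majority vote over labels from several annotators and a dictionary of known-correct "gold" answers; the function names and the strict-majority rule are illustrative choices, not a description of any specific provider's QA system.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.5):
    """Return (label, agreement) for a list of annotator votes.

    The label is None when no label is chosen by more than
    `min_agreement` of the annotators, so the item can be escalated.
    """
    if not votes:
        raise ValueError("At least one vote is required")
    label, top_count = Counter(votes).most_common(1)[0]
    agreement = top_count / len(votes)
    return (label if agreement > min_agreement else None), agreement


def gold_accuracy(annotator_labels, gold_labels):
    """Share of gold-standard items that an annotator labeled correctly."""
    shared = annotator_labels.keys() & gold_labels.keys()
    if not shared:
        raise ValueError("Annotator has not labeled any gold items")
    correct = sum(annotator_labels[k] == gold_labels[k] for k in shared)
    return correct / len(shared)


if __name__ == "__main__":
    print(consensus_label(["cat", "cat", "dog"]))
    print(consensus_label(["cat", "dog"]))
    print(gold_accuracy({"a": "cat", "b": "dog", "c": "cat"},
                        {"a": "cat", "b": "cat"}))
```

Items without a consensus label are natural candidates for a staged review by a senior annotator, and a falling gold accuracy for one annotator is an early signal that retraining or guideline changes are needed.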

Seamless Integration

Annotation workflows should integrate directly into existing ML pipelines. SDKs, APIs, and connectors facilitate easy movement of data between labeling tools and storage systems.

Real-time dashboards and collaboration tools provide insight into quality and progress metrics. Flexible export formats remove conversion overhead, so labeled data can go directly into model training.

Security and Compliance

The platform you choose should meet standards such as ISO 27001, SOC 2, HIPAA, and GDPR. It should use encryption, role-based access, and audit trails to protect sensitive data.

For regulated industries, on-premises or VPC deployment adds an extra layer of control while keeping datasets compliant and secure.

Why is Quality Data Annotation Challenging?

Data annotation presents unique challenges that significantly impact artificial intelligence model performance and development timelines. Understanding these challenges helps data scientists and teams make smarter choices about how they label data and which providers they work with.

Multi-Modal Complexity

Today’s AI applications require annotation across diverse data types such as images, video, text, audio, and 3D point clouds. Each data type demands specialized tools and expertise.

Computer vision annotation tasks vary dramatically in cost. Simple bounding boxes cost $0.02 to $0.04 per object, while complex semantic segmentation ranges from $0.05 to $5.00 per label because of its pixel-level precision requirements.

On the other hand, video footage annotation costs significantly more due to the need for frame-by-frame data labeling. Likewise, 3D point cloud annotation for autonomous vehicles is priced at a premium since it relies on specialized Light Detection and Ranging (LiDAR) expertise.
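To see how the per-label ranges quoted above translate into a project budget, a rough estimate can be computed by multiplying label counts by the low and high ends of each range. The sketch below uses only the bounding box and semantic segmentation figures from this section; the function name and the 250,000-label example are assumptions, and real quotes will also depend on volume discounts, QA passes, and turnaround time.

```python
# Per-label price ranges in USD, taken from the figures quoted in this section.
RATES_USD = {
    "bounding_box": (0.02, 0.04),
    "semantic_segmentation": (0.05, 5.00),
}


def estimate_cost(task, num_labels):
    """Return a (low, high) cost estimate in USD for a labeling task."""
    if task not in RATES_USD:
        raise KeyError(f"No rate range known for task: {task}")
    low_rate, high_rate = RATES_USD[task]
    return round(num_labels * low_rate, 2), round(num_labels * high_rate, 2)


if __name__ == "__main__":
    for task in RATES_USD:
        low, high = estimate_cost(task, 250_000)
        print(f"{task}: ${low:,.2f} to ${high:,.2f}")
```

For 250,000 bounding boxes this gives roughly $5,000 to $10,000, while the same number of segmentation labels spans a far wider range, which is why scoping the annotation type early matters for budgeting.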

Domain Expertise Requirements

Various industry-specific applications need annotators with deep domain knowledge. For instance, medical imaging annotation requires certified professionals. This drives costs 3-5 times higher than general imagery annotation, but ensures regulatory compliance.

Autonomous vehicle projects require an understanding of traffic scenarios, sensor fusion, and safety protocols. Similarly, sentiment analysis of legal documents needs expertise in regulatory frameworks and terminology.

Scale and Cost Implications

Data annotation challenges, particularly in domains that require specialized industry expertise, can significantly increase costs. Medical and life sciences consistently maintain the highest prices. Large data volumes, comprising over 100,000 items, benefit from volume discounts but require a substantial upfront investment.

Recent data annotation projects highlight the scale and complexity involved:

- Autonomous vehicle annotation projects often require millions of images annotated with 2D bounding boxes and 3D cuboids.

- Medical AI projects need precise anatomical labeling across DICOM formats, often handled by radiology-level experts at $50 to $100 per hour.

- Teams of over 100 specialized annotators working for months are common.

Overcoming Data Annotation Challenges

These challenges can be addressed through advanced data annotation services that combine intelligent data selection, domain expertise, and strict quality control.

The key is selecting a solution that fits the complexity, data types, and quality requirements of your large-scale annotation projects.

Lightly AI and Its Role in Data Annotation

Lightly AI is not a traditional data annotation tool. Instead, it is an end-to-end data annotation service that provides infrastructure to make annotation workflows smarter, faster, and more cost-effective.

Lightly provides use-case-specific high-quality data labeling services for pre-training, fine-tuning, and evaluating models. Through human-in-the-loop and synthetic data generation techniques, Lightly overcomes annotation gaps in complex tasks and augments unlabeled samples for robust training outcomes.

Besides these features, Lightly AI provides a full suite of products for developing scalable computer vision frameworks, as mentioned below.

Edge-Level Optimization for Annotation Pipelines with LightlyEdge

Using LightlyEdge, redundant frames are filtered out directly on the device before they reach storage or labeling. This reduces dataset size, highlights rare but important samples, and lowers the annotation workload. The result is faster processes and fewer wasted resources.
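The general principle behind filtering redundant frames can be sketched with embedding similarity: a frame is kept only if it is sufficiently different from the frames already kept. This is not the LightlyEdge API or its actual algorithm; the function names, the two-dimensional toy embeddings, and the 0.95 cosine similarity threshold are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filter_redundant(frames, threshold=0.95):
    """Return ids of frames that are not near-duplicates of kept frames.

    `frames` is an iterable of (frame_id, embedding) pairs in capture order.
    """
    kept = []
    for frame_id, embedding in frames:
        # Keep the frame only if it differs enough from every kept frame.
        if all(cosine_similarity(embedding, e) < threshold for _, e in kept):
            kept.append((frame_id, embedding))
    return [frame_id for frame_id, _ in kept]


if __name__ == "__main__":
    frames = [
        ("f0", [1.0, 0.0]),
        ("f1", [0.99, 0.01]),  # nearly identical to f0
        ("f2", [0.0, 1.0]),
        ("f3", [0.01, 0.99]),  # nearly identical to f2
    ]
    print(filter_redundant(frames))
```

Comparing each new frame against every kept frame is quadratic in the worst case, so a device-side implementation would typically bound the comparison window or use an approximate nearest-neighbor index.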

Infrastructure for Secure Annotation Workflows with LightlyOne

LightlyOne uses a worker-based architecture that keeps raw data under your control. Only metadata and embeddings are shared. This helps organizations meet strict security and compliance standards in sensitive industries.

Performance Upgrade for Annotation Workflows using LightlyOne 3.0

LightlyOne 3.0 speeds up annotation workflows by processing datasets up to six times faster while using 70% less memory. For teams, this means quicker access to curated samples, fewer bottlenecks, and a steady flow of high-value data ready for labeling.

Why It Matters

Lightly enhances data annotation projects by using state-of-the-art (SOTA) technology to offer enterprise-grade labeling services and products. It helps teams get more out of every annotation cycle with measurable impact: it cuts labeling costs by up to a factor of four, improves data quality, and accelerates deployment.

Conclusion

High-quality data annotation underpins reliable results for AI models. The right provider combines accuracy, scalability, and secure workflows with strengths like speed, automation, and multilingual expertise.

AI-assisted labeling, active learning, and pilot projects further cut manual effort and help validate quality before scaling. As the field evolves, annotation will increasingly be shaped by automation features, edge-level intelligence, and tighter integration with model training.
