Train Test Validation Split: Best Practices & Examples


The train-test-validation split is a best practice in machine learning to ensure models generalize well. Training data teaches the model, validation fine-tunes it, and the test set provides an unbiased evaluation on unseen data.

Understanding train test validation split is crucial for preventing overfitting and obtaining an unbiased assessment of model performance before deployment. Here’s a quick summary of key takeaways.

What is the train test validation split?

It is the process of dividing a dataset into three distinct subsets: training, validation, and test. Each subset serves a specific purpose in model development and evaluation.

- Training Set: The largest portion, used to train the machine learning model and optimize its internal model parameters.

- Validation Set: Used during the iterative process of model development to fine-tune the model's hyperparameters (like learning rate or regularization strength) and assess intermediate model performance to detect overfitting.

- Test Set: A completely held-out portion, used only once at the very end to provide an unbiased estimate of the final model accuracy on truly unseen data in real-world scenarios.

Why is train test validation split crucial for machine learning models?

It simulates how a model would perform on new, unseen data. This reveals when the model has simply memorized the training data (overfitting) and helps ensure it generalizes well to real-world applications.

What are the typical split ratios?

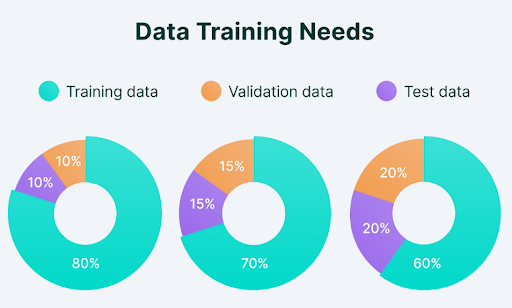

Common split ratios include 70% training, 15% validation, 15% test, or 80% training, 10% validation, 10% test. For simpler train-test splits, 75% training and 25% testing, or 80% training and 20% testing, are common.

What happens if the data is not split properly?

Not splitting, or splitting improperly, lets overfitting go undetected. The model performs exceptionally well on the data it was trained on, but poorly on new, unseen data. Any accuracy measured on data the model has already seen is a biased and unreliable assessment.

How does train test validation split relate to hyperparameter tuning and model evaluation?

The validation set is specifically used for hyperparameter tuning. It helps select the best hyperparameters without touching the final test set. The test set then provides the ultimate, unbiased model evaluation.

Building a machine learning model that performs exceptionally well on training data is one thing. The true measure of a machine learning model is how well it can generalize and make accurate predictions on completely unseen data in real-world scenarios.

This is where the concept of the train-test-validation split becomes a critical step in any ML project.

In this guide, we'll walk you through everything you need to know about splitting your data for AI model development.

Here’s what we will cover:

- Training, validation, and test sets: The core components

- Data splitting strategies: Best practices

- Implementing train test validation split in practice

- How to use Lightly for training, validation, and test splits

Getting the split right is important, but the quality of the data you put into each set matters just as much. At Lightly, we help you make smarter choices about what data to train, validate, and test on:

- LightlyOne: Curate the most representative and diverse samples for each split to ensure balanced coverage and fewer biases.

- LightlyTrain: Pretrain and fine-tune models to maximize performance from your carefully selected data.

Together, they help your models generalize better and deliver more reliable results in real‑world scenarios.

Training, Validation, and Test Sets: The Core Components

Before we dive into the data splitting strategies and best practices, let’s break down the role of each set of data.

The Training Set

The training set holds most of the available data (typically 60-80% of the total dataset) and is used to teach the ML model.

The model learns the hidden features and patterns in the data. During training, it compares its predictions with the expected outputs and adjusts its internal parameters to reduce the error, which improves its ability to link inputs to the right outputs.

Key Consideration: Your training set must be large and diverse enough to cover the scenarios the model will encounter. If it's too small or lacks diversity, the model might underfit (fail to learn the patterns), overfit to the few examples it has, or learn biased patterns.

For example, if you want more diversity in your image data, with balanced classes to avoid overfitting and underfitting, you can do so with the LightlyOne Selection feature.

It allows you to specify a target class distribution and select images based on thresholding. For example, you can remove blurry images and choose the sharpest ones, among other options.

💡Pro Tip: To avoid overfitting when selecting train, validation, and test splits, our Overfitting article gives practical advice on detecting and preventing models from memorizing your data.

Below is the example code to select the samples from the raw data based on the classes. For more details, see the complete guide for common selection use cases.

    {
        "n_samples": 100,  # set to the number of samples you want to select
        "strategies": [
            {
                "input": {
                    "type": "PREDICTIONS",
                    "task": "lightly_pretagging",  # (optional) change to your task
                    "name": "CLASS_DISTRIBUTION"
                },
                "strategy": {
                    "type": "BALANCE",
                    "distribution": "TARGET",  # only needed for LightlyOne Worker version >= 2.12
                    "target": {
                        "car": 0.1,
                        "bicycle": 0.5,
                        "bus": 0.1,
                        "motorcycle": 0.1,
                        "person": 0.1,
                        "train": 0.05,
                        "truck": 0.05
                    }
                }
            }
        ]
    }


The Validation Set

The validation set is a separate piece of data that we use to validate our model's performance during training.

Unlike the training data, the model doesn’t learn directly from the validation set. Instead, it helps assess how well the model can handle new, unseen data.

The validation process also helps fine-tune the model’s hyperparameters and configurations to improve its performance.

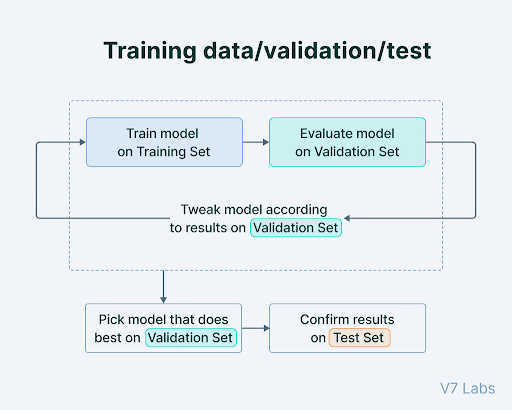

The usual process for model development and hyperparameter tuning follows a repetitive cycle:

1. The model is initially trained on the training set.

2. The model's performance is evaluated on the validation set, either periodically or at the end of an epoch.

3. Adjustments are made to hyperparameters like learning rate and regularization based on validation results. Changes can also be made to the model design, such as modifying layers or activation functions.

4. Steps 1 to 3 are repeated until the model performs well enough on the validation set.

Key Consideration: This set is a fair substitute for the test set during development. If you tune the model too much to the validation set, you might overfit it and make it less likely to perform well on new data later.
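The tuning cycle described above can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the data is synthetic, the "model" is a one-dimensional ridge regression, and the helper names `fit_ridge_1d` and `mse` are made up for this example. The point is the order of operations: fit on the training set, choose the hyperparameter on the validation set, and touch the test set exactly once at the end.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Closed-form 1-D ridge regression without intercept."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Synthetic data (assumption for illustration): y = 2x + noise
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(300)]
ys = [2.0 * x + rng.gauss(0, 0.3) for x in xs]

# The samples are already in random order, so plain slicing gives a random split.
x_train, y_train = xs[:200], ys[:200]
x_val, y_val = xs[200:250], ys[200:250]
x_test, y_test = xs[250:], ys[250:]

# Steps 1 to 3: train, evaluate on validation, adjust the hyperparameter.
candidates = (0.0, 0.1, 1.0, 10.0, 100.0)
best_lam, best_val_error = None, float("inf")
for lam in candidates:
    w = fit_ridge_1d(x_train, y_train, lam)
    val_error = mse(w, x_val, y_val)
    if val_error < best_val_error:
        best_lam, best_val_error = lam, val_error

# Only after tuning is finished: a single evaluation on the test set.
final_w = fit_ridge_1d(x_train, y_train, best_lam)
test_error = mse(final_w, x_test, y_test)
print(best_lam, round(best_val_error, 3), round(test_error, 3))
```

Because the regularization strength was chosen using the validation error, that error is slightly optimistic. The test error is the number to report.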

The Test Set

The test set is an entirely separate part of the data (typically 10-20% of the total dataset), kept aside from both training and validation. It is used to test the model after completing the training.

It provides an unbiased evaluation of how well the model generalizes to unseen data and assesses its capabilities in real-world scenarios.

Key Consideration: Never use the test set to make decisions about your model, such as selecting hyperparameters. Even evaluating its results to see if you should keep training constitutes a form of "peeking" that compromises its role as an unbiased evaluator.

We’ve prepared a table with a summary of each data set's unique role, purpose, and timing in the machine learning workflow.

Why Three Sets Are Often Better Than Two

Using two groups, for training and testing, is common. But dividing data into three parts, training, validation, and testing, is generally better.

The primary reason for having a separate validation set is to avoid using the test set repeatedly to tune the model. Doing so causes the test set to lose its ability to give an unbiased performance estimate for new data.

Common split ratios vary based on dataset size and specific needs. A 75% training and 25% testing split is the default in libraries like scikit-learn, with 80/20 also common. For a three-split, common ratios are 60/20/20, 80/10/10, and 70/15/15.

Smaller percentages like 98% training, 1% validation, and 1% testing can still be effective for large datasets, such as those with millions of records. Even 1% of a large dataset represents a statistically relevant and substantial portion of the data.
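To make the arithmetic concrete, the sketch below converts percentage ratios into subset sizes. The helper `split_sizes` is a hypothetical function written for this example, not part of any library. It takes whole-number percentages so that the result does not depend on floating-point rounding.

```python
def split_sizes(n_samples, percentages):
    """Turn integer percentages (train, val, test) into subset sizes."""
    if sum(percentages) != 100:
        raise ValueError("percentages must sum to 100")
    sizes = [n_samples * p // 100 for p in percentages]
    # Integer division can leave a few samples over; give them to the training set.
    sizes[0] += n_samples - sum(sizes)
    return sizes


print(split_sizes(1_000_000, (98, 1, 1)))  # [980000, 10000, 10000]
print(split_sizes(1_000, (70, 15, 15)))    # [700, 150, 150]
```

With one million records, a 1% validation or test set still contains 10,000 samples, which is why such small percentages can be enough for large datasets.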

💡Pro Tip: When evaluating object detection models, choose your train, validation, and test splits carefully so that your mAP reflects true performance rather than overfitting. Look at our Object Detection guide for details on how detection evaluation differs from classification.

Pro tip: Splitting your data the right way is only half the battle - choosing the right tools matters too. Check out our list of the Top Computer Vision Tools in 2025 to find the best fit for your next project.

Common Data Splitting Strategies: Best Practices

When preparing datasets for machine learning, there is no single best way to split the data. The right strategy depends on the type of data you have (images, time series, grouped samples, etc.) and the challenges you want to avoid (bias, imbalance, or leakage).

Below are the most common splitting strategies, along with when to use them.

- Random Splitting

Random sampling mixes up all the data and then splits it into training, validation, and test groups based on certain percentages.

Distributing data randomly helps prevent biases that might otherwise consistently go into a single subset.

Random split is best used with large, varied datasets where each data point is independent and evenly represented. But it is not the correct approach with imbalanced datasets.
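A random three-way split can be sketched with the standard library alone. The function name `random_split` and its default percentages are assumptions made for this example. A fixed seed keeps the split reproducible between runs.

```python
import random


def random_split(items, val_pct=15, test_pct=15, seed=42):
    """Shuffle the items once, then cut them into train, validation, and test."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = len(items) * test_pct // 100
    n_val = len(items) * val_pct // 100
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


train, val, test = random_split(range(1000))
print(len(train), len(val), len(test))  # 700 150 150
```

In a scikit-learn workflow, calling `train_test_split` twice (first to separate the test set, then to separate validation from training) gives the same kind of result.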

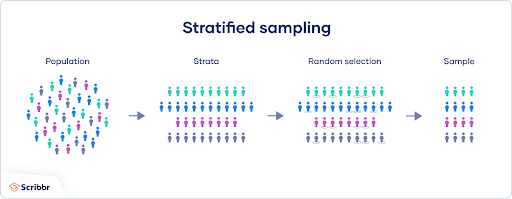

- Stratified Splitting

Stratified splitting divides the dataset into parts while keeping the original proportion of classes or categories in each subset (training, validation, and test).

It is best to use it for imbalanced datasets, where some classes have very few samples compared to others.

A random split might accidentally put all your rare class examples into the test set, leaving none for the model to train on. But stratified split ensures that rare classes are adequately included in each set to avoid bias in the final model.

For example, if your dataset has 90% cat and 10% dog images, a stratified split will ensure that each of your training, validation, and test sets also contains roughly 90% cat and 10% dog images.

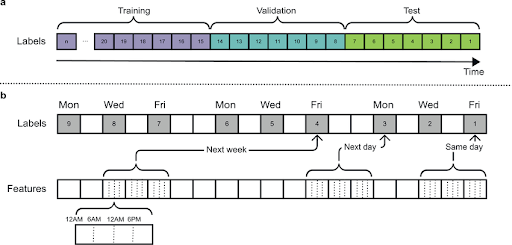

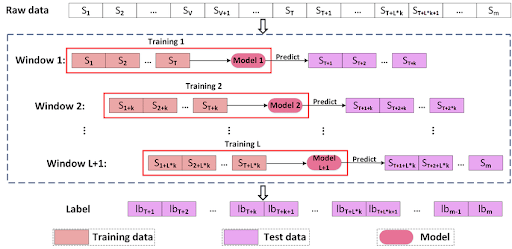
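The cat and dog example can be reproduced with a small sketch that splits each class separately and then merges the pieces. The function `stratified_split` is a hypothetical helper for illustration. It returns indices into the label list.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_pct=15, test_pct=15, seed=0):
    """Return (train, val, test) index lists with class proportions preserved."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Apply the same percentages inside every class.
        n_test = len(indices) * test_pct // 100
        n_val = len(indices) * val_pct // 100
        test += indices[:n_test]
        val += indices[n_test:n_test + n_val]
        train += indices[n_test + n_val:]
    return train, val, test


labels = ["cat"] * 900 + ["dog"] * 100
train, val, test = stratified_split(labels)
for name, subset in (("train", train), ("val", val), ("test", test)):
    dogs = sum(labels[i] == "dog" for i in subset)
    print(name, len(subset), dogs)
# train 700 70
# val 150 15
# test 150 15
```

Every subset keeps the 10% share of dog images. In scikit-learn, the `stratify` argument of `train_test_split` serves the same purpose.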

- Time-Based Splitting

When working with time series data, such as stock prices, weather patterns, or sensor readings, using standard random splitting is inappropriate. Instead, split the data based on time (time-based splitting), using earlier data for training and later data for testing.

The time-based splitting approach reflects real-life scenarios where models predict future events using only past data. This method helps prevent data leakage from the future into training.

Advanced methods, like rolling window validation or walk-forward validation, move the training period forward in time step by step, testing the model on the next period each time. This technique allows the model to be updated continuously.
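Both ideas, a single chronological split and a window that rolls forward, can be sketched as follows. The record layout (a tuple whose first element is the timestamp) and the function names are assumptions for this example.

```python
def time_based_split(records, val_pct=15, test_pct=15):
    """Split chronologically: oldest records for training, newest for testing."""
    ordered = sorted(records, key=lambda record: record[0])  # record[0] is the timestamp
    n = len(ordered)
    n_test = n * test_pct // 100
    n_val = n * val_pct // 100
    train_end = n - n_val - n_test
    return (
        ordered[:train_end],
        ordered[train_end:train_end + n_val],
        ordered[train_end + n_val:],
    )


def walk_forward_windows(n_samples, train_size, test_size):
    """Yield (train_indices, test_indices) pairs that slide forward in time."""
    start = 0
    while start + train_size + test_size <= n_samples:
        train_idx = list(range(start, start + train_size))
        test_idx = list(range(start + train_size, start + train_size + test_size))
        yield train_idx, test_idx
        start += test_size


# Hypothetical daily measurements: (day, value)
records = [(day, day * 0.5) for day in range(100)]
train, val, test = time_based_split(records)
print(train[-1][0], val[0][0], test[0][0])  # 69 70 85

windows = list(walk_forward_windows(10, train_size=4, test_size=2))
for train_idx, test_idx in windows:
    print(train_idx, test_idx)
# [0, 1, 2, 3] [4, 5]
# [2, 3, 4, 5] [6, 7]
# [4, 5, 6, 7] [8, 9]
```

Every test index is later than every training index in the same window, so no future information reaches the model. scikit-learn's `TimeSeriesSplit` offers a related scheme with an expanding training window.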

- Group Splitting

Group splitting is for datasets where data points are not independent but belong to logical groups. For example, multiple samples might come from the same patient, or different frames from the same video.

In such cases, group splitting ensures that all data points from a single group are kept together in exactly one of the training, validation, or test sets, so that no group is spread across sets.

For example, instead of dividing video frames randomly, it's better to split entire videos, like videos 1, 3, 7, and 10 for training, videos 2, 4, 6 for validation, and others for testing.

This approach is critical since data points within a group often relate to each other, and mixing them up can lead to an unfair evaluation of the model's performance.

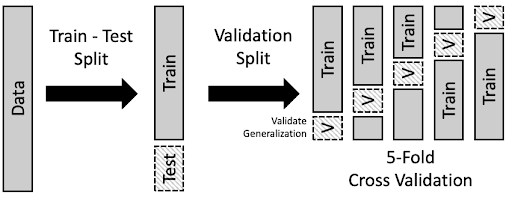
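The video example can be sketched by shuffling the group identifiers instead of the individual frames. The helper `group_split` and the video names are hypothetical. Note that the percentages apply to the number of groups, not the number of samples.

```python
import random


def group_split(group_ids, val_pct=20, test_pct=20, seed=0):
    """Assign whole groups to train, val, or test; return lists of sample indices."""
    groups = sorted(set(group_ids))
    random.Random(seed).shuffle(groups)
    n_test = max(1, len(groups) * test_pct // 100)
    n_val = max(1, len(groups) * val_pct // 100)
    test_groups = set(groups[:n_test])
    val_groups = set(groups[n_test:n_test + n_val])

    train, val, test = [], [], []
    for idx, group in enumerate(group_ids):
        if group in test_groups:
            test.append(idx)
        elif group in val_groups:
            val.append(idx)
        else:
            train.append(idx)
    return train, val, test


# 10 hypothetical videos with 30 frames each
frame_video_ids = [f"video_{v}" for v in range(10) for _ in range(30)]
train, val, test = group_split(frame_video_ids)

train_videos = {frame_video_ids[i] for i in train}
val_videos = {frame_video_ids[i] for i in val}
test_videos = {frame_video_ids[i] for i in test}
print(len(train), len(val), len(test))  # 180 60 60
```

No video contributes frames to more than one subset. scikit-learn provides `GroupShuffleSplit` and `GroupKFold` for the same purpose.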

- Cross-Validation Splitting

Cross-validation splits a dataset into training and validation sets several times instead of once.

It creates multiple data subsets, each of which serves as training data in some iterations and as validation data in others.

Cross-validation does not prevent overfitting by itself, but it makes overfitting easier to detect and gives a more reliable measure of performance than a single split.

- K-Fold Cross-Validation: It divides the dataset into k equal-sized sections called 'folds'. The model trains on k-1 of these folds and is evaluated on the remaining one. The process repeats so that each fold serves as the evaluation set once. The results are averaged to get a better idea of how the model performs overall.

- Stratified K-Fold Cross-Validation: Stratified K-Fold splits the data so that each fold has the same proportion of different groups or classes as the original data. It helps when the data is imbalanced, because every fold contains examples of the rare classes, which makes the evaluation fairer.
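The fold rotation in K-Fold can be sketched as an index generator. The function `k_fold_indices` is a hypothetical helper, and it assumes the data has already been shuffled, because the folds are contiguous blocks.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) for each of k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


folds = list(k_fold_indices(10, k=5))
for fold, (train_idx, val_idx) in enumerate(folds):
    print(fold, val_idx)
# 0 [0, 1]
# 1 [2, 3]
# 2 [4, 5]
# 3 [6, 7]
# 4 [8, 9]
```

Each sample appears in a validation fold exactly once. A model would be trained and scored once per fold, and the k scores averaged. scikit-learn's `KFold` and `StratifiedKFold` implement the same idea with shuffling and class balancing built in.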

Domain-Aware Splitting

Standard splits assume that test data comes from the same distribution as training data (independent and identically distributed or i.i.d.), which often isn't true in practice.

Real-world applications encounter a domain shift, where unseen deployment data differs greatly from the training data. We need to move beyond the i.i.d. assumption and adopt domain-aware splitting strategies to overcome the shift challenges.

Domain-aware sampling strategies like adversarial validation use a discriminator model to distinguish between training data and testing or validation data.

If the discriminator can easily differentiate between the sets, it indicates a domain shift. That information is then used to create better data splits or improve strategies to adjust to different data domains.

Similarly, domain-free adversarial splitting (DFAS) creates train and validation splits by maximizing the domain difference between the two. DFAS deliberately makes the split as challenging as possible, and the model is then trained to generalize across that boundary.

Take a look at this table for a quick comparison of the most common data splitting methods.

Implementing Train Test Validation Split in Practice

Besides selecting the right splitting method, following best practices is also crucial to keep the data accurate and the machine learning model trustworthy.

Preventing Data Leakage: The Silent Killer of Model Performance

Data leakage occurs when information from the validation or test set unintentionally contaminates the training data and influences the model training process.

It can make the model appear more accurate than it truly is during evaluation, but it may perform poorly in real-world situations.

There are several types of data leakage, such as target leakage. This happens when information related to the target variable is included as a feature, even though it wouldn't be available at the time of prediction.

Similarly, feature leakage occurs when a feature indirectly contains information too closely related to the target data.

Strategies to Overcome Data Leakage

To prevent data from leaking between training and testing, you can use these strategies:

- Isolate Test Set Early: Always set aside the test set before doing any data preprocessing, feature engineering, or data augmentation. It should stay completely unseen until testing.

- Apply Transformations Consistently: Any changes, like scaling or normalization, should be based only on the training data. When applying these to validation or test data, use the same settings without recalculating.

- Time-Aware Splitting: For time series, split data in chronological order so that future data doesn’t affect past predictions.

- Group-Aware Splitting: For correlated data, like by patient ID or video sequence, keep whole groups together in one split.
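The "apply transformations consistently" rule is easy to get wrong, so here is a minimal sketch. The class `TrainOnlyScaler` is a hypothetical stand-in for a real preprocessing step. It learns the mean and standard deviation from the training data and reuses them unchanged for the test data.

```python
import statistics


class TrainOnlyScaler:
    """Minimal standardizer: learns mean and std in fit(), reuses them in transform()."""

    def fit(self, values):
        self.mean = statistics.fmean(values)
        self.std = statistics.pstdev(values) or 1.0  # avoid division by zero
        return self

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]


train = [10.0, 12.0, 14.0, 16.0, 18.0]
test = [20.0, 22.0]

scaler = TrainOnlyScaler().fit(train)      # statistics come from training data only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)       # same mean and std, no refitting

print(scaler.mean, round(scaler.std, 3))   # 14.0 2.828
print([round(v, 3) for v in test_scaled])  # [2.121, 2.828]
```

Fitting the scaler on the combined data, or refitting it on the test set, would let test statistics influence the features the model sees. Libraries such as scikit-learn follow the same pattern: call `fit` on the training split and only `transform` on the others.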

💡Pro tip: Looking for the perfect data annotation tool? Check out 12 Best Data Annotation Tools for Computer Vision (Free & Paid).

Data Augmentation and Data Splitting Order

Data augmentation, especially in computer vision, is a useful technique because it increases the size and diversity of the training data. It does so by applying various transformations, such as rotating, flipping, cropping, or changing the colors of images.

The best approach is to split the data first and then apply augmentation only to the training set.

If data augmentation is done before splitting or applied to validation or test data, it can cause the same original data to appear in multiple splits. This leads to an overly optimistic estimate of the model’s accuracy as the model is tested on data that is too similar to its training data.

Also, augmenting the validation data makes it less valuable because real-life data isn't usually augmented.
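The ordering can be sketched with toy data. The tiny nested-list "images", the `horizontal_flip` helper, and the file naming are assumptions for illustration. What matters is that the split happens on the original image ids and that only the training side is augmented.

```python
def horizontal_flip(image):
    """Flip a 2-D image (a list of rows) left to right."""
    return [row[::-1] for row in image]


# Hypothetical dataset: image id -> tiny 2x3 "image"
dataset = {f"img_{i}": [[i, i + 1, i + 2], [i + 3, i + 4, i + 5]] for i in range(10)}

# 1) Split on the original image ids first.
ids = sorted(dataset)
train_ids, val_ids = ids[:8], ids[8:]

# 2) Augment only the training split.
train_set = []
for image_id in train_ids:
    train_set.append((image_id, dataset[image_id]))
    train_set.append((image_id + "_flip", horizontal_flip(dataset[image_id])))

# The validation split keeps the untouched originals.
val_set = [(image_id, dataset[image_id]) for image_id in val_ids]

print(len(train_set), len(val_set))  # 16 2
```

Because the flipped copies are created after the split, no validation image has an augmented twin in the training set.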

Mistakes You Need to Avoid When Data Splitting

Getting your data split wrong can invalidate all your hard work. Here are some common pitfalls to avoid.

- Creating a Poor Training Set: If your training set is too small, not diverse enough, or of low quality, the model won't have enough good data to learn from. Ensure the quality of your data before feeding it into the model.

- Improper Shuffling or Sorting: In most cases, you should shuffle your data before splitting to ensure randomness. If the data stays sorted (for example, by class or by collection date), the subsets can end up unrepresentative and the model biased. Time series are the exception and should stay in chronological order.

- Over-tuning to the Validation Set: It's easy to fall into the trap of tweaking your model until it gets a perfect score on the validation set. However, if you overdo this, you risk fitting the model too specifically to that data, and it will generalize worse to new data.

How to use Lightly for Training, Validation, and Test Splits

When you get started with a computer vision project, there is an immense amount of raw data to handle, and labeling all of it is expensive.

Using random splitting for training data and labeling may result in redundant images or missing rare scenes that could potentially undermine the model's performance.

The Lightly platform helps you pick the best data and create splits with minimal manual effort. It uses embedding-based strategies like DIVERSITY and TYPICALITY to ensure the data is diverse, representative, and of high quality.

With LightlyOne, you can scale active learning. Start from raw images, select the most valuable subsets for training, validation, and testing, and optimize the labeling process.

Let’s see how we can do this.

1. Install the Lightly Python client package

    !pip install lightly

2. Download the LightlyOne Worker

    !docker pull lightly/worker:latest

    !docker run --shm-size="1024m" --rm lightly/worker:latest sanity_check=True

3. Prepare Image Data

We'll download and use the CIFAR-10 dataset here. Alternatively, you can use your own dataset or the sample clothing dataset from Lightly.

    !curl -L -o cifar-10-python.tar.gz https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

Unzip the image data to a folder:

    !tar -xzf cifar-10-python.tar.gz

Then, convert batches to PNG images:

    import os
    import pickle

    import numpy as np
    from PIL import Image

    # Folder created by extracting the archive in the working directory
    BATCH_DIR = 'cifar-10-batches-py'

    # Helper to load a batch file
    def load_batch(file):
        with open(file, 'rb') as f:
            return pickle.load(f, encoding='bytes')

    # Create output directories
    os.makedirs('cifar_images', exist_ok=True)

    # Process training batches
    for batch_id in range(1, 6):
        batch = load_batch(os.path.join(BATCH_DIR, f'data_batch_{batch_id}'))
        data = batch[b'data']  # shape (10000, 3072)
        for i in range(len(data)):
            # Each row stores the image as 3 x 32 x 32 (channels first); convert to 32 x 32 x 3
            img = data[i].reshape(3, 32, 32).transpose(1, 2, 0)
            img = Image.fromarray(img)
            img.save(f'cifar_images/img_{batch_id}_{i}.png')

4. Schedule a Selection run

Get your LightlyOne token from app.lightly.ai/preferences. Set it as LIGHTLY_TOKEN in your environment or code.

    from os import linesep
    from pathlib import Path
    from datetime import datetime
    import platform

    import torch
    from lightly.api import ApiWorkflowClient
    from lightly.openapi_generated.swagger_client import DatasetType, DatasourcePurpose

    ###### CHANGE THESE 2 VARIABLES
    LIGHTLY_TOKEN = "CHANGE_ME_TO_YOUR_TOKEN"  # Copy from https://app.lightly.ai/preferences
    DATASET_PATH = Path(r"D:\lightly\cifar_images")  # e.g., Path("/path/to/images") or Path("clothing_dataset")
    ######

    assert DATASET_PATH.exists(), f"Dataset path {DATASET_PATH} does not exist."

    # Create the LightlyOne client to connect to the API.
    client = ApiWorkflowClient(token=LIGHTLY_TOKEN)

    # Create the dataset on the LightlyOne Platform.
    # See our guide for more details and options:
    # https://docs.lightly.ai/docs/set-up-your-first-dataset
    client.create_dataset(
        dataset_name=f"first_dataset__{datetime.now().strftime('%Y_%m_%d__%H_%M_%S')}",
        dataset_type=DatasetType.IMAGES,
    )

    # Configure the datasources.
    # See our guide for more details and options:
    # https://docs.lightly.ai/docs/set-up-your-first-dataset
    client.set_local_config(purpose=DatasourcePurpose.INPUT)
    client.set_local_config(purpose=DatasourcePurpose.LIGHTLY)

    # Schedule a run on the dataset to select 500 diverse samples.
    # See our guide for more details and options:
    # https://docs.lightly.ai/docs/run-your-first-selection
    scheduled_run_id = client.schedule_compute_worker_run(
        worker_config={"shutdown_when_job_finished": True},
        selection_config={
            "n_samples": 500,  # set to the number of samples you want to select
            "proportion_samples": 0.1,
            "strategies": [
                {
                    "input": {
                        "type": "EMBEDDINGS"
                    },
                    "strategy": {
                        "type": "DIVERSITY"
                    }
                },
                {
                    # Remove e.g. blurry images, which equals selecting
                    # samples whose sharpness is above a threshold.
                    "input": {
                        "type": "METADATA",
                        "key": "lightly.sharpness"
                    },
                    "strategy": {
                        "type": "THRESHOLD",
                        "threshold": 20,
                        "operation": "BIGGER"
                    }
                },
            ]
        },
    )

    # Print the next commands
    gpus_flag = " --gpus all" if torch.cuda.is_available() else ""
    omp_num_threads_flag = " -e OMP_NUM_THREADS=1" if platform.system() == "Darwin" else ""

    print(
        f"{linesep}Docker Run command: {linesep}"
        f"\033[7m"
        f"docker run{gpus_flag}{omp_num_threads_flag} --shm-size='1024m' --rm -it \\{linesep}"
        f"\t-v '{DATASET_PATH.absolute()}':/input_mount:ro \\{linesep}"
        f"\t-v '{Path('lightly').absolute()}':/lightly_mount \\{linesep}"
        f"\t-e LIGHTLY_TOKEN={LIGHTLY_TOKEN} \\{linesep}"
        f"\tlightly/worker:latest{linesep}"
        f"\033[0m"
    )

    print(
        f"{linesep}Lightly Serve command:{linesep}"
        f"\033[7m"
        f"lightly-serve \\{linesep}"
        f"\tinput_mount='{DATASET_PATH.absolute()}' \\{linesep}"
        f"\tlightly_mount='{Path('lightly').absolute()}'{linesep}"
        f"\033[0m"
    )

5. Process the run with the LightlyOne Worker

Run the Docker command, and the worker will take a while to process your dataset.

    !docker run --shm-size=1024m --rm \
        -v /d/lightly/cifar_images:/input_mount:ro \
        -v /d/lightly/lightly:/lightly_mount \
        -e LIGHTLY_TOKEN=CHANGE_ME_TO_YOUR_TOKEN \
        lightly/worker:latest

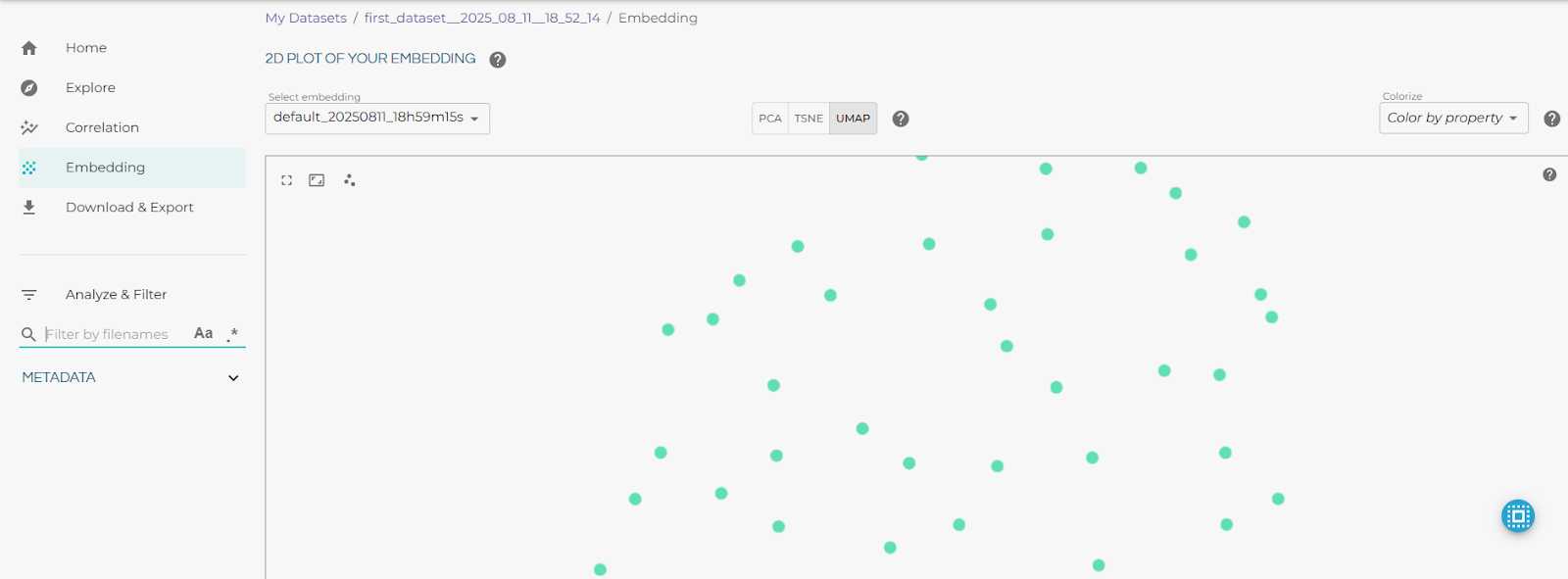

6. Explore the selected training set

Next, you can view and explore the selected training dataset interactively on the LightlyOne platform by using the Lightly serve command.

    !lightly-serve \
        input_mount='D:\lightly\cifar_images' \
        lightly_mount='D:\lightly\lightly'

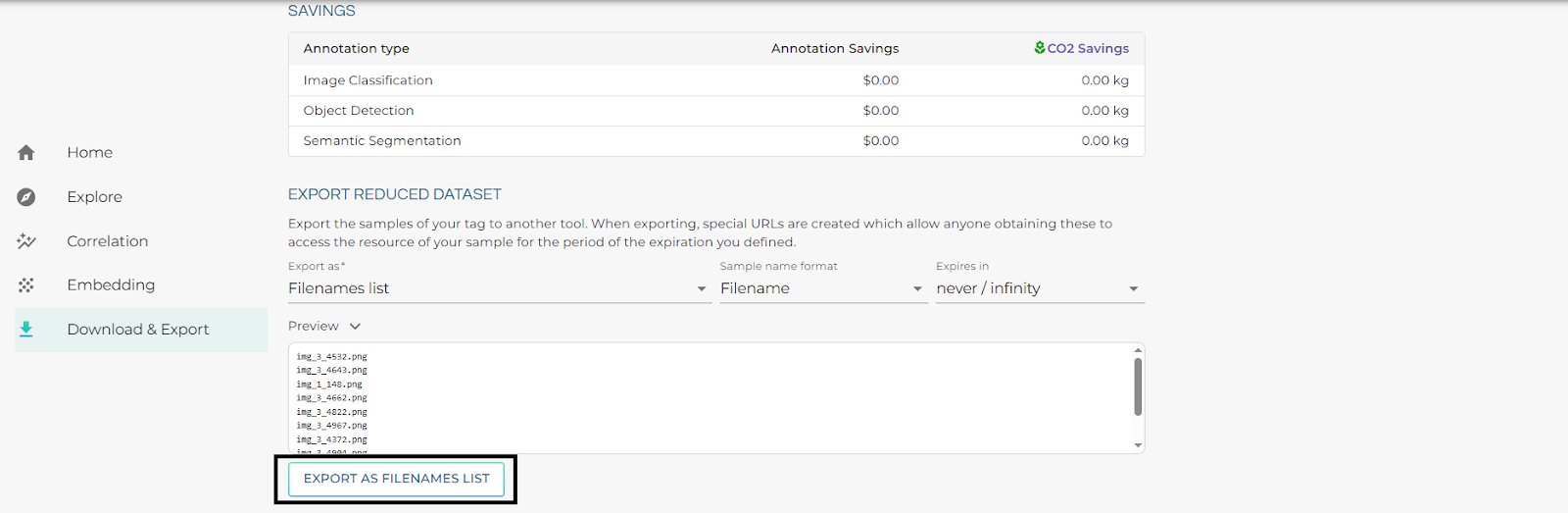

Download the text file (.txt) containing the selected training image filenames. Then place it in the working directory.

The text file contains the names of the most diverse images selected by LightlyOne. The code below will copy the chosen training images from your raw image folder to a separate folder for easy access.

    import shutil
    import os

    selected_train = []
    with open("filenames-first_dataset__2025_08_11__17_39_26-20250811_13h55m11s-1754920515135.txt") as f:
        for line in f:
            fname = line.strip().split(",")[0]  # if CSV with scores, take first column
            if fname:  # skip empty lines
                selected_train.append(fname)

    # Create the destination folder for selected images
    os.makedirs('cifar10_split/train', exist_ok=True)

    # Copy the selected images from cifar_images to the new folder
    for fname in selected_train:
        src = os.path.join('cifar_images', fname)  # Source: cifar_images folder
        dst = os.path.join('cifar10_split/train', fname)  # Destination: new folder
        if os.path.exists(src):  # Check if source file exists
            shutil.copy(src, dst)
            print(f"Copied: {fname}")
        else:
            print(f"File not found: {src}")

We now have a training set, selected with the DIVERSITY and THRESHOLD strategies to maximize visual coverage and reduce redundancy. We set n_samples to 500 here; for your own project, select roughly 70% or 80% of your total data.

You can repeat the same approach (create a new selection run) to create validation and test sets, but use different sampling strategies.

Use the TYPICALITY strategy for a validation set that reflects the overall dataset distribution, and set n_samples to about 10% or 15% of your data.

For the test set, use the DIVERSITY strategy to cover edge cases and challenging examples that test model robustness. Again, set n_samples to about 10-15% of your data.

Also, be sure to exclude the already chosen training images from the new selections. When running validation selection, exclude training images, and when running test selection, exclude both training and validation images.
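One simple way to enforce this exclusion locally is to filter the candidate pool by filename before each new selection. The sketch below is only a local bookkeeping helper under that assumption. The function `remaining_pool` and the filenames are hypothetical, and it does not use any LightlyOne API.

```python
def remaining_pool(all_filenames, *already_selected):
    """Return filenames that are not part of any earlier selection, order preserved."""
    taken = set().union(*already_selected)
    return [name for name in all_filenames if name not in taken]


all_files = [f"img_1_{i}.png" for i in range(10)]
train_files = ["img_1_0.png", "img_1_3.png", "img_1_7.png"]

# Candidates for the validation selection: everything except training images.
val_pool = remaining_pool(all_files, train_files)
val_files = val_pool[:2]  # stand-in for the result of a second selection run

# Candidates for the test selection: exclude both training and validation images.
test_pool = remaining_pool(all_files, train_files, val_files)

print(len(val_pool), len(test_pool))  # 7 5
```

The remaining files could then be copied to a separate input folder for the next selection run, so that the three sets never share an image.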

Using LightlyTrain on the Training Set

With the training set in hand (cifar10_split/train), we can pretrain a model using LightlyTrain. LightlyTrain is a self-supervised training framework.

1. Install the LightlyTrain package

    !pip install lightly-train

2. Pre-train the model

    import lightly_train

    dataset_path = "./cifar10_split/train"

    if __name__ == "__main__":
        lightly_train.train(
            out="out/my_experiment",  # Output directory
            data=dataset_path,  # Directory with images
            model="torchvision/resnet50",
            epochs=2,  # Number of epochs to train
            batch_size=32,  # Batch size
        )

Once the training is complete, the out/my_experiment directory contains the following files:

    out/my_experiment
    ├── checkpoints
    │   ├── epoch=99-step=123.ckpt   # Intermediate checkpoint
    │   └── last.ckpt                # Last checkpoint
    ├── events.out.tfevents.123.0    # Tensorboard logs
    ├── exported_models
    │   └── exported_last.pt         # Final model exported
    ├── metrics.jsonl                # Training metrics
    └── train.log                    # Training logs

The final model is exported to out/my_experiment/exported_models/exported_last.pt in the default format of the used library.

Now it can be directly used for fine-tuning for tasks such as classification, detection, or semantic segmentation.

Key Takeaways: Mastering Data Splitting

The train test validation split brings reliability to building machine learning models. The way you split your data directly affects how well your model learns, adapts, and performs in the real world.

From simple random splits to more advanced strategies to handle time, imbalance, or domain shifts, the best approach depends on your data and goals.

With the right strategy and a bit of experimentation, you can create splits that measure performance accurately and prepare your model to face the challenges of unseen data.
