Lightly vs. Ultralytics: Technical Comparison


Lightly is a data-centric platform offering dataset insights, self-supervised learning, fine-tuning, auto-labeling, and continuous curation. Ultralytics is a model-centric YOLO ecosystem focused on fast training, deployment, and inference.

Lightly vs. Ultralytics: Data-Centric vs. Model-Centric Vision Workflows

- Lightly is a data-centric, all-in-one platform for computer vision teams. It offers dataset understanding, self-supervised pretraining, fine-tuning, auto-labeling, and continuous data curation, covering the entire ML lifecycle from raw images to production-ready models.

- Ultralytics is a model-centric ecosystem built around state-of-the-art object detection and segmentation pipelines. It streamlines training, deployment, and inference of YOLO models through a unified CLI and web-based HUB.

Introduction

Traditional computer vision workflows often rely on separate tools for data management, labeling, and model training, which makes them inefficient and difficult to scale.

To address this, all-in-one platforms have emerged to speed up the computer vision pipeline by combining these steps.

Two leading solutions in this space are Lightly and Ultralytics. Both aim to make it easier to go from raw images to models ready for use, but they take different approaches to do so.

In this article, we will compare Lightly vs. Ultralytics across features, workflows, and practical applications to help you decide which is the right fit for your computer vision stack.


Tool Overviews: Data Curation Platform vs. Object Detection Framework

Before we dive into the detailed comparison, let’s review the profiles of each tool and its role in a computer vision workflow.

Lightly: The All-in-One Platform for Vision Data and Models

Lightly is a computer vision suite that makes vision data curation, annotation, self-supervised pretraining, and active learning accessible and efficient for ML teams.

It enables users to use vast amounts of unlabeled data to pretrain models and to select the most valuable samples for labeling.

Lightly automates data pipelines to improve model performance while reducing labeling costs and time to deployment.

Lightly's primary offerings include:

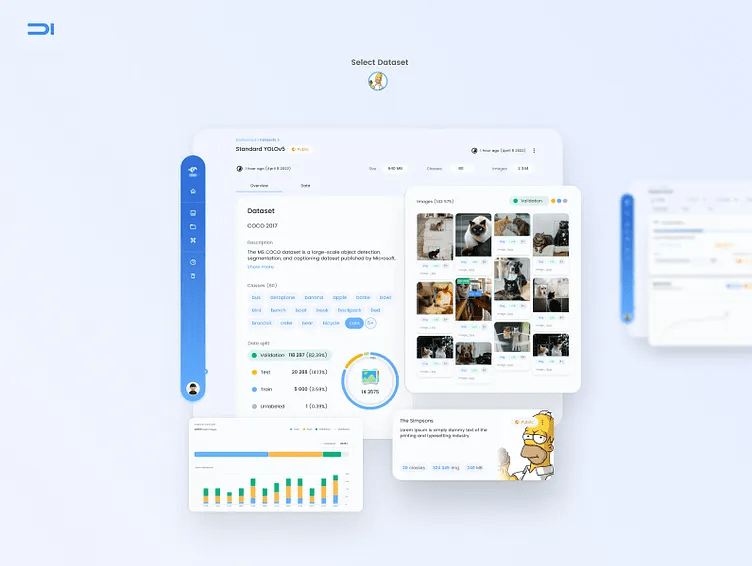

- LightlyStudio: A unified platform for dataset curation, visualization, annotation, and quality assurance (QA).

- LightlyTrain: Uses state-of-the-art self-supervised learning (SSL) to pretrain models on unlabeled data or distill knowledge from large foundation models.

- LightlyEdge: An SDK for smart data selection at the point of collection. It runs on-device, selects only the most valuable data for upload, and cuts data transfer and storage costs at their source.

Ultralytics: The YOLO Ecosystem and HUB

Ultralytics is known for its YOLO (You Only Look Once) family of object detection models. Its ecosystem is built to make training, using, and deploying YOLO models as fast and accessible as possible.

The ecosystem consists of two primary offerings:

- YOLO Models: Ultralytics develops and maintains YOLO models, including YOLOv8, YOLO12, and the upcoming YOLO26. These models balance speed and accuracy well, and can perform various tasks such as object detection, image classification, segmentation, and pose estimation.

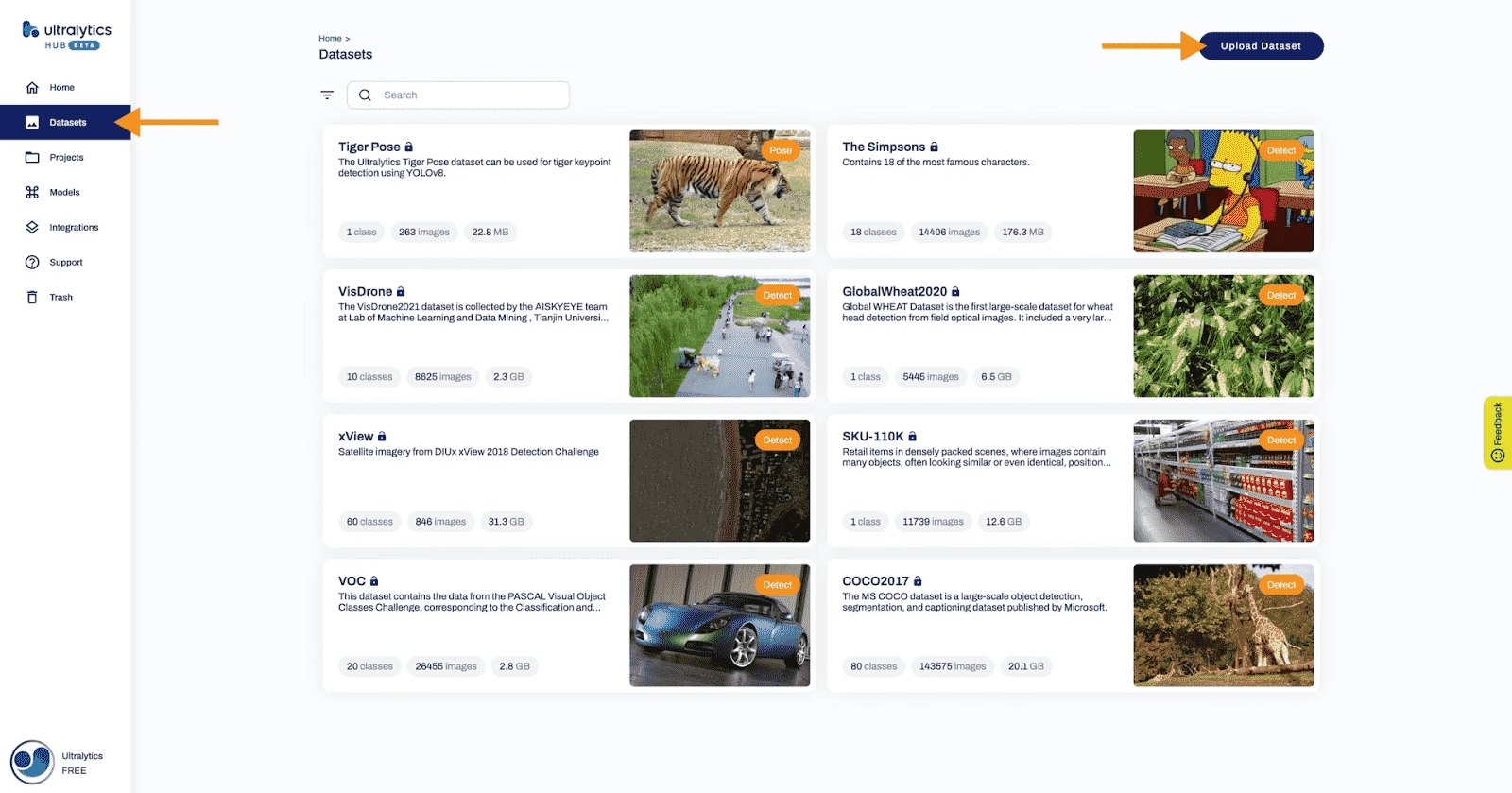

- Ultralytics HUB: A no-code cloud-based platform for customizing YOLO models. It allows users to upload their datasets, train the models, monitor the results, and deploy them through a web-based interface.

💡 Pro Tip: If you are evaluating detection and segmentation stacks for practical deployment, the Computer Vision Applications article helps you match model capabilities to real use cases.

Core Comparison of Lightly vs. Ultralytics

The primary difference between Lightly and Ultralytics is in their starting point.

Lightly starts with your raw, messy data and helps you refine it. Ultralytics starts with a model and provides a simple path to train it on your prepared training data.

Let's compare them across key dimensions to see how they meet the needs of computer vision projects.

Data Curation and Management

Lightly

LightlyStudio offers a unified environment where you can explore datasets and annotate images, videos, and other data formats.

It lets you manage different versions of your data with tags and supports inline labeling with robust quality assurance workflows.

You can connect LightlyStudio to a data source from cloud storage, such as an S3 bucket with millions of images, or work from a local system.

Once connected, LightlyStudio generates embeddings for all images, which help you understand the dataset's structure, find clusters and duplicate samples, and spot data biases.
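To make the duplicate-detection idea concrete, here is a minimal sketch, assuming every image has already been mapped to an embedding vector. The toy vectors, the 0.98 threshold, and the `find_near_duplicates` helper are hypothetical and are not LightlyStudio's API: two images are flagged as near-duplicates when the cosine similarity of their embeddings reaches the threshold.

```python
import math
from itertools import combinations


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_near_duplicates(
    embeddings: dict[str, list[float]], threshold: float = 0.98
) -> list[tuple[str, str]]:
    """Return every pair of image IDs whose embeddings are nearly identical.

    This brute-force O(n^2) scan is for illustration only; at millions of
    images an approximate nearest-neighbor index would be used instead.
    """
    pairs = []
    for (id_a, emb_a), (id_b, emb_b) in combinations(embeddings.items(), 2):
        if cosine_similarity(emb_a, emb_b) >= threshold:
            pairs.append((id_a, id_b))
    return pairs


# Hypothetical 3-D embeddings; real ones have hundreds of dimensions.
embeddings = {
    "frame_001.jpg": [0.90, 0.10, 0.00],
    "frame_002.jpg": [0.89, 0.11, 0.01],  # almost the same view as frame_001
    "night_017.jpg": [0.10, 0.20, 0.95],
}
print(find_near_duplicates(embeddings))  # [('frame_001.jpg', 'frame_002.jpg')]
```

In a curation workflow, one image from each flagged pair would typically be kept and the others excluded from labeling.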

Lightly uses multiple active learning data selection strategies like diversity sampling and metadata thresholding to identify the most impactful data for model training.

This greatly reduces the volume of data that needs manual labeling, saving teams both time and resources without compromising model performance.
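The two selection strategies named above can be illustrated with a small, self-contained sketch. It is an illustration under stated assumptions, not Lightly's implementation: metadata thresholding is modeled as a filter on a hypothetical per-image `brightness` field, and diversity sampling is modeled with greedy farthest-point (k-center) selection over embeddings, a common way to pick samples that cover the embedding space.

```python
import math


def euclidean(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def metadata_threshold(samples: list[dict], key: str, max_value: float) -> list[dict]:
    """Metadata thresholding: keep samples whose metadata value is <= max_value."""
    return [s for s in samples if s[key] <= max_value]


def diversity_sample(samples: list[dict], k: int) -> list[str]:
    """Diversity sampling via greedy farthest-point (k-center) selection."""
    if not samples or k <= 0:
        return []
    chosen = [0]  # deterministic start: the first sample in the pool
    # min_dist[i] = distance from sample i to its nearest chosen sample
    min_dist = [euclidean(s["embedding"], samples[0]["embedding"]) for s in samples]
    while len(chosen) < min(k, len(samples)):
        idx = max(range(len(samples)), key=lambda i: min_dist[i])
        if min_dist[idx] == 0.0:
            break  # everything left duplicates an already chosen sample
        chosen.append(idx)
        center = samples[idx]["embedding"]
        for i, s in enumerate(samples):
            min_dist[i] = min(min_dist[i], euclidean(s["embedding"], center))
    return [samples[i]["id"] for i in chosen]


# Hypothetical pool: 2-D embeddings plus a brightness value per image.
pool = [
    {"id": "day_a", "brightness": 0.8, "embedding": [0.0, 0.0]},
    {"id": "day_b", "brightness": 0.7, "embedding": [0.1, 0.0]},
    {"id": "night_a", "brightness": 0.1, "embedding": [5.0, 5.0]},
    {"id": "night_b", "brightness": 0.2, "embedding": [5.1, 5.0]},
    {"id": "rain_a", "brightness": 0.4, "embedding": [0.0, 5.0]},
]
dark = metadata_threshold(pool, "brightness", 0.3)
print([s["id"] for s in dark])    # ['night_a', 'night_b']
print(diversity_sample(pool, 3))  # ['day_a', 'night_b', 'rain_a']
```

With a budget of three, the diversity sampler skips the near-identical `day_b` and `night_a` and instead picks one image from each visually distinct region of the pool.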

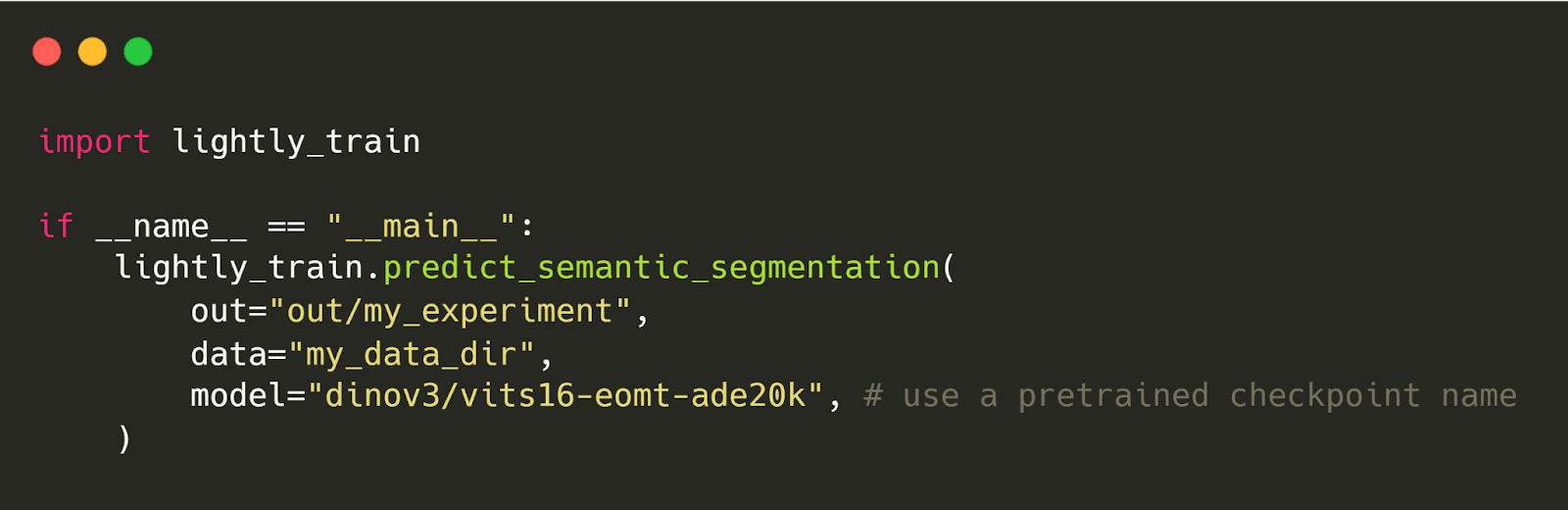

Furthermore, LightlyTrain features an auto-labeling option (currently for semantic segmentation masks).

You can use it to automatically generate pseudo-labels for your unlabeled images using a pre-trained model checkpoint. These auto-generated labels can then be used for further training of the model.
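As a rough illustration of how semantic segmentation pseudo-labels can be derived from a model's predictions, the sketch below takes per-pixel class probabilities (hypothetical values standing in for a pretrained checkpoint's output), assigns each pixel its most likely class, and marks low-confidence pixels with an ignore index so they do not contribute to later training. The 0.7 confidence threshold, the ignore value 255, and the class names are assumptions for this example; LightlyTrain's own auto-labeling internals may differ.

```python
IGNORE_INDEX = 255  # common "ignore" label value in segmentation datasets


def pseudo_label_mask(
    probs: list[list[list[float]]], threshold: float = 0.7
) -> list[list[int]]:
    """Convert per-pixel class probabilities (H x W x C) into a label mask.

    Each pixel gets its argmax class if the model is confident enough,
    otherwise IGNORE_INDEX.
    """
    mask = []
    for row in probs:
        mask_row = []
        for pixel in row:
            best_class = max(range(len(pixel)), key=lambda c: pixel[c])
            if pixel[best_class] >= threshold:
                mask_row.append(best_class)
            else:
                mask_row.append(IGNORE_INDEX)
        mask.append(mask_row)
    return mask


# A hypothetical 2 x 2 image with 3 classes (background, crop, weed).
probs = [
    [[0.90, 0.05, 0.05], [0.10, 0.85, 0.05]],
    [[0.40, 0.35, 0.25], [0.05, 0.15, 0.80]],
]
print(pseudo_label_mask(probs))  # [[0, 1], [255, 2]]
```

Raising the threshold produces fewer but cleaner pseudo-labels; lowering it labels more pixels at the risk of training on the model's own mistakes.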

Ultralytics

Ultralytics assumes that data curation has already been done before you use the platform.

You have basic dataset management features like organizing datasets and basic visualization in Ultralytics HUB, but curation is not its key focus.

To work with your data, you start by uploading datasets to HUB or connecting external tools like Roboflow.

Also, Ultralytics does not have its own labeling tool, so you need to use external annotation services for labeling or fixing your data before training.

This keeps the workflow efficient for model development, but less flexible when it comes to controlling or iterating on dataset curation.

💡 Pro Tip: When comparing Lightly and Ultralytics in a real workflow, our Top Computer Vision Tools article helps you understand which tools complement each ecosystem best.

Model Training and Development

Lightly

With LightlyTrain, Lightly focuses on improving the initial stage of training ML models, pretraining a better base model, before that model is fine-tuned.

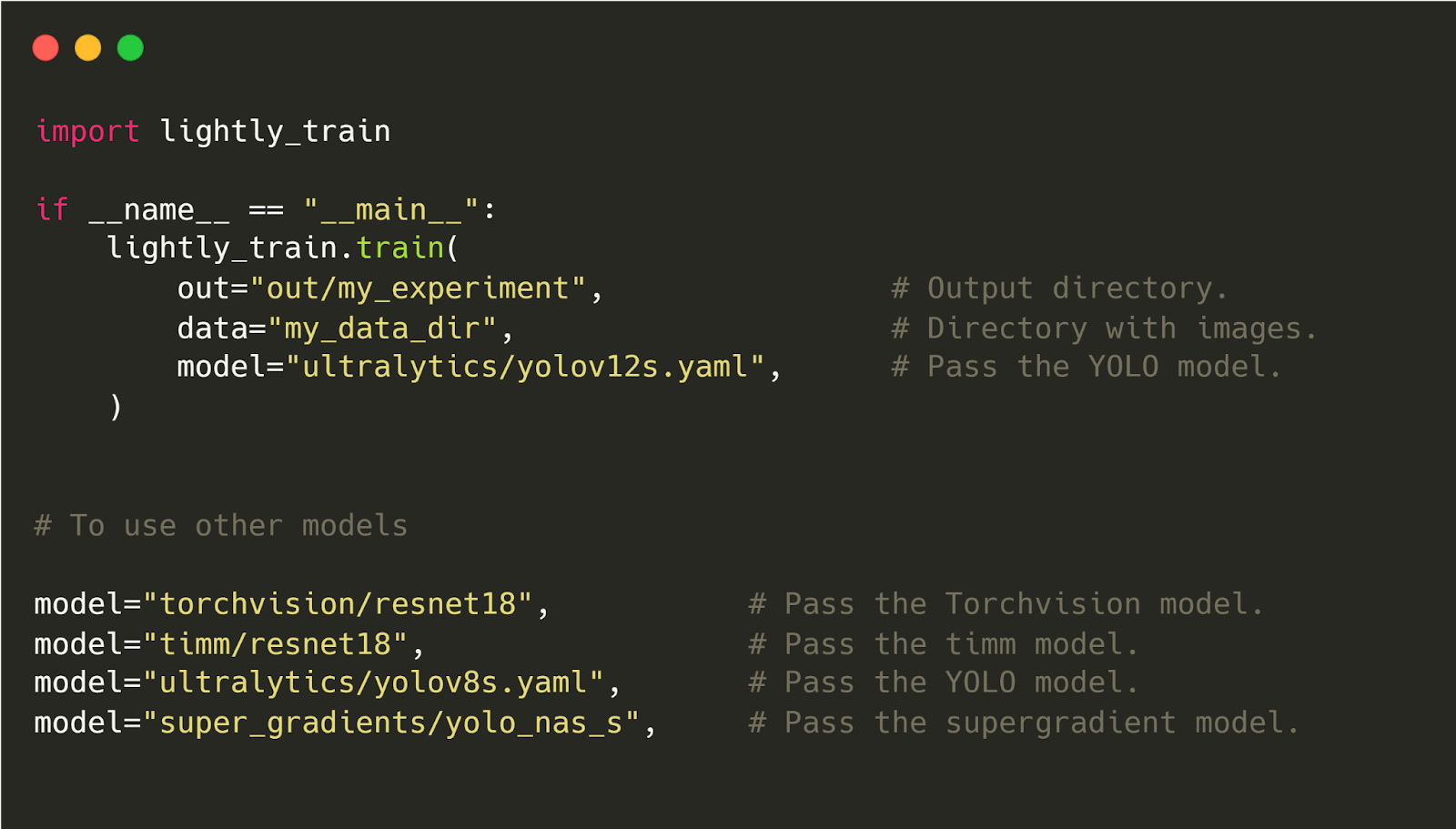

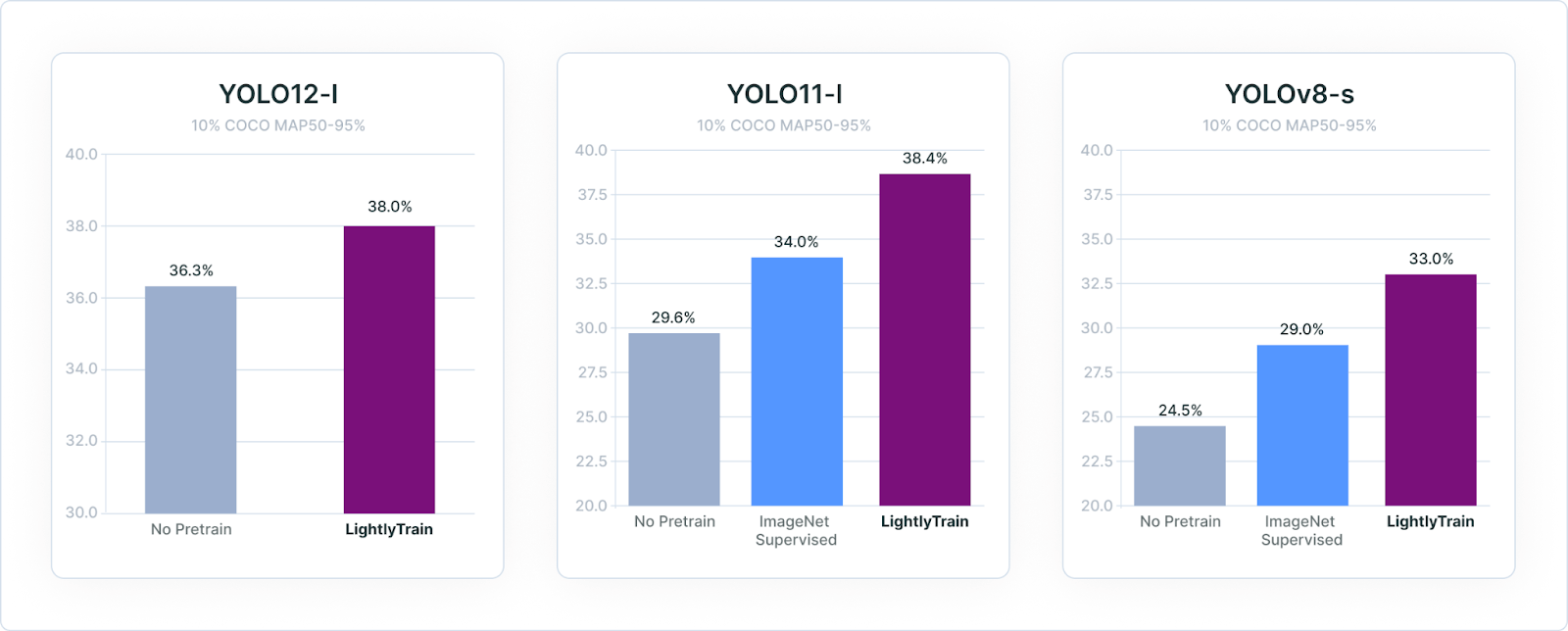

- Self-Supervised Pretraining: LightlyTrain enables you to pretrain vision models, including YOLO and Vision Transformers, using unlabeled data with SSL techniques such as DINOv2, DINOv3, SimCLR, and DINO.

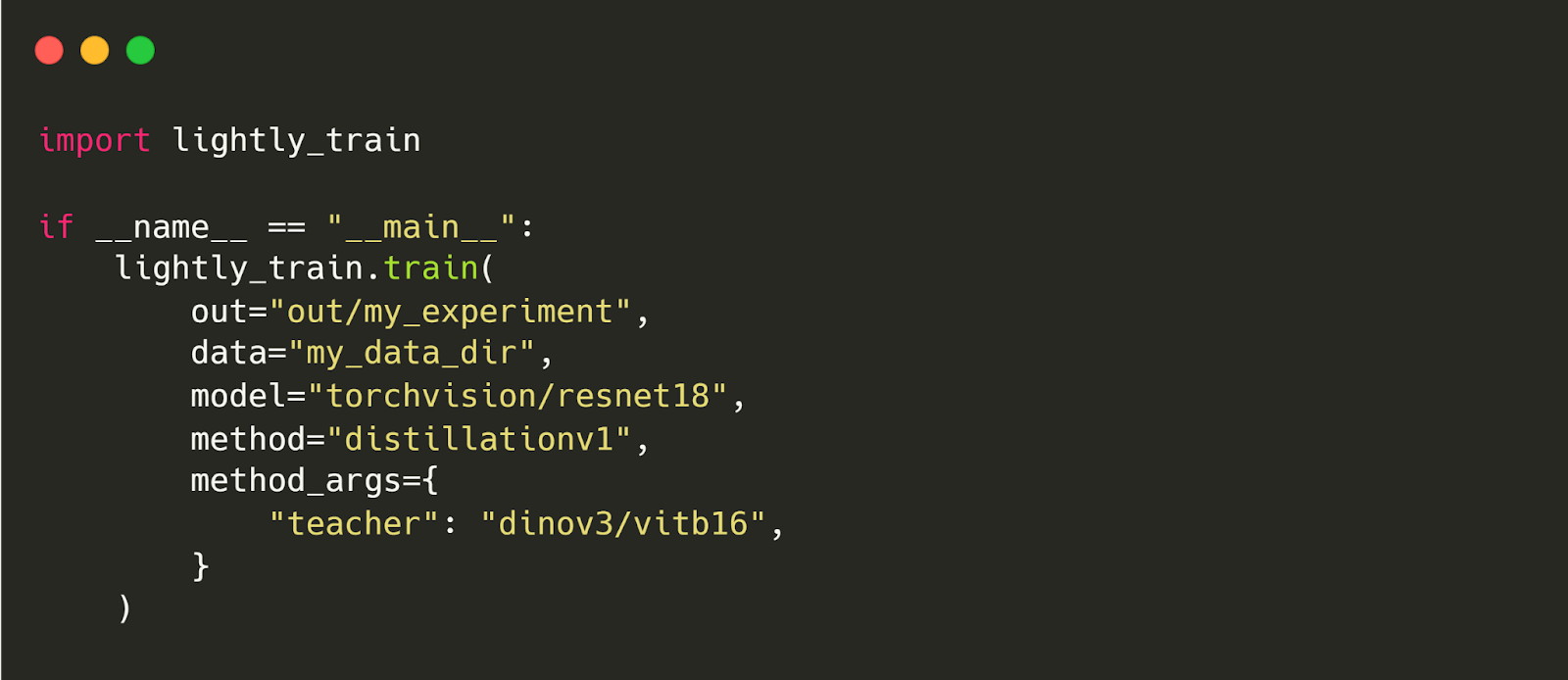

- Knowledge Distillation: LightlyTrain supports distilling knowledge from large foundation models (DINOv2 or DINOv3) into smaller, more efficient ones. For example, YOLOv8s models pretrained on the COCO dataset with LightlyTrain achieve high performance across all tested label fractions, and these benefits extend to other models like YOLO12, RT-DETR, and Faster R-CNN.

- Flexibility Across Model Architectures: You can pretrain models from Ultralytics, Torchvision, TIMM, SuperGradients, RT-DETR, and more.

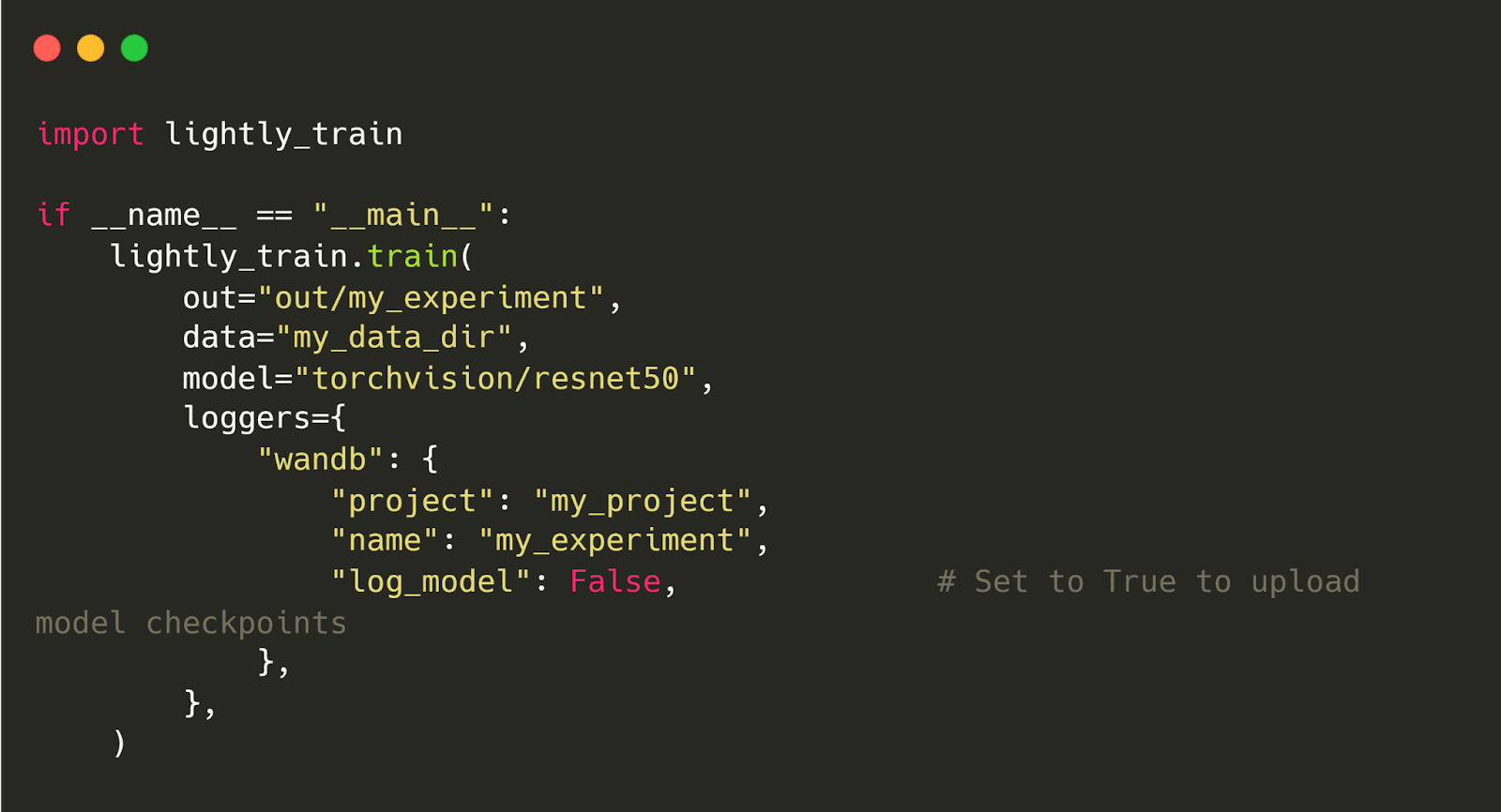

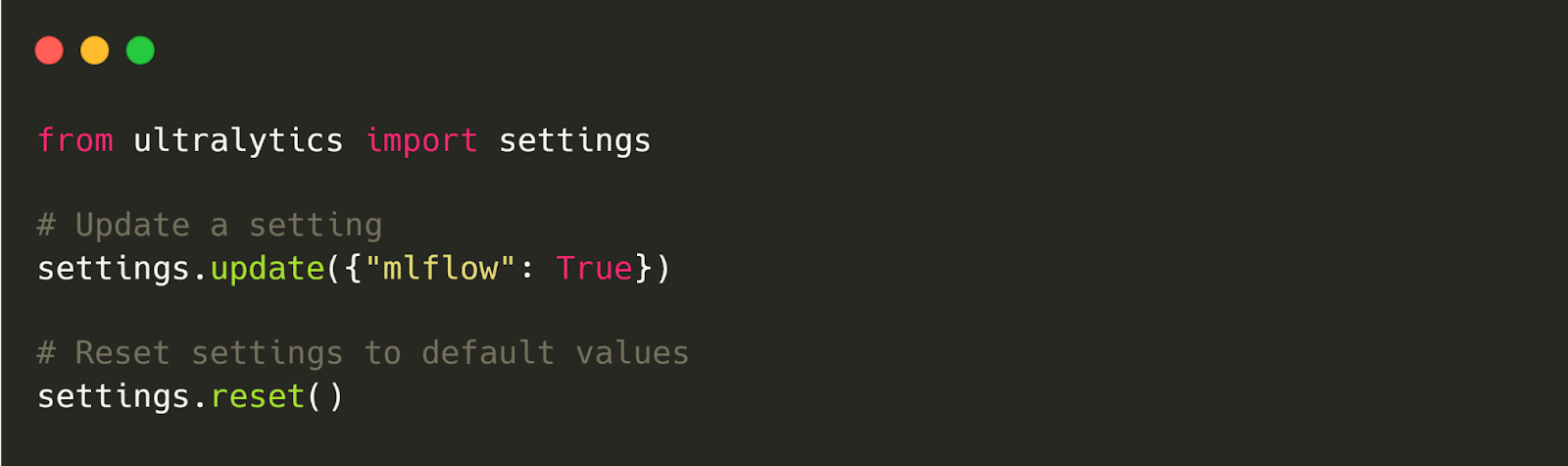

- Training Monitoring: LightlyTrain works with tools like TensorBoard, Weights & Biases (wandb), and MLflow for experiment tracking and visualization of training progress. The wandb logger can be configured with arguments such as the project and run name.
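A minimal sketch of that wandb configuration, assuming the `loggers` argument of `lightly_train.train` as described in the LightlyTrain documentation. The data directory, output path, project, and run names are hypothetical placeholders, and the keyword arguments are built in a plain function so they can be inspected before LightlyTrain itself is imported.

```python
def build_pretrain_kwargs(data_dir: str, out_dir: str, project: str, run_name: str) -> dict:
    """Arguments for lightly_train.train() with Weights & Biases logging enabled."""
    return {
        "out": out_dir,                       # where checkpoints and logs are written
        "data": data_dir,                     # folder of unlabeled images
        "model": "ultralytics/yolov8s.yaml",  # model to pretrain
        "loggers": {
            "wandb": {
                "project": project,  # W&B project that groups the runs
                "name": run_name,    # display name of this run
                "log_model": False,  # set True to upload checkpoints to W&B
            },
        },
    }


def run_pretraining(kwargs: dict) -> None:
    """Start pretraining (requires `pip install lightly-train` and the wandb package)."""
    import lightly_train

    lightly_train.train(**kwargs)
```

LightlyTrain's own examples call `train()` under an `if __name__ == "__main__":` guard, so a script would end with `run_pretraining(build_pretrain_kwargs("my_data_dir", "out/my_experiment", "my_project", "run_1"))` inside that guard.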

Ultralytics

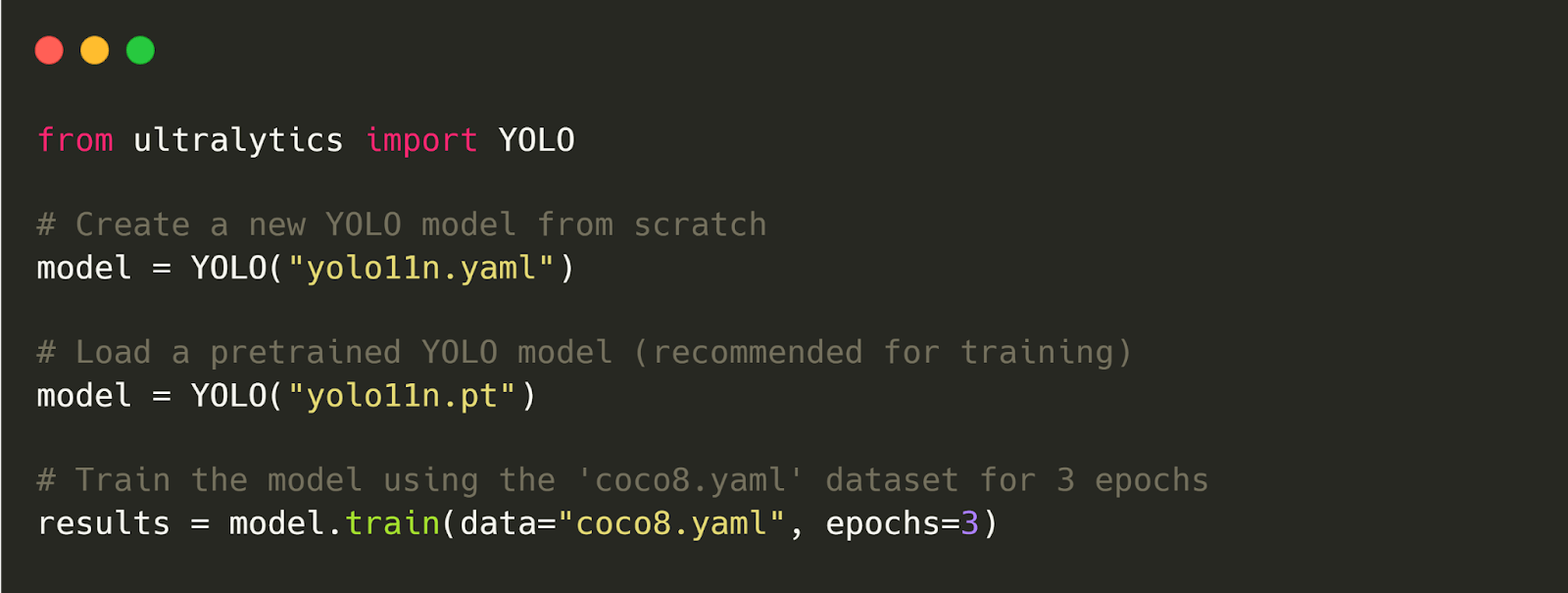

Ultralytics focuses on the fine-tuning (or transfer learning) of YOLO models for quick use and deployment.

- Pretrained YOLO Models: Ultralytics offers a range of YOLO models that have already been trained on large datasets such as COCO and ImageNet. You can start from these pretrained models and fine-tune them on your own data to save time and compute.

- Transfer Learning: YOLO pretrained weights simplify transfer learning to your specific task. You can start from the default pretrained weights (set pretrained=True) or from your own custom weights.

- No-Code Training via HUB: Ultralytics HUB allows you to train YOLO models without requiring any code. You can upload your data, choose a model, set training options, and start the training.

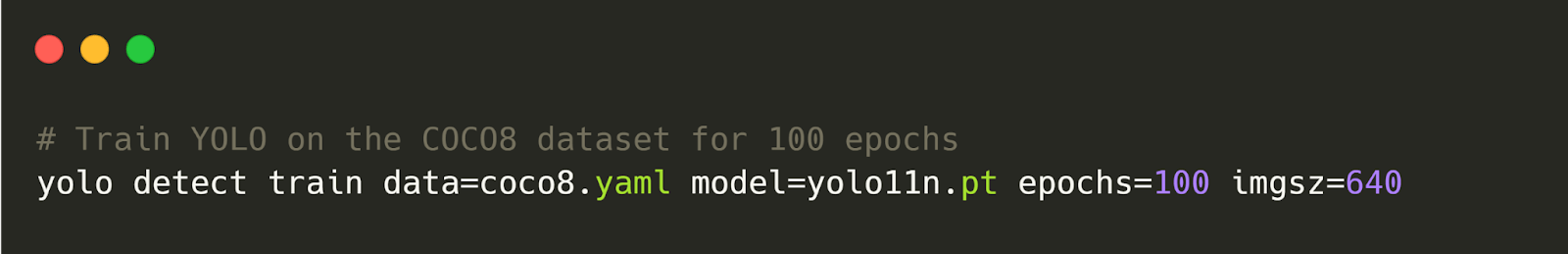

- Hyperparameter Configuration: Ultralytics offers extensive hyperparameter options, such as learning rate, batch size, and augmentation strategies, to help improve your model's performance. You can set them through YAML files or command-line (CLI) arguments; for example, a detection model can be trained from the CLI with a single yolo detect train command.

- Multi-Task Support: YOLO models can handle various tasks in computer vision, including object detection, image segmentation, image classification, pose estimation, and oriented bounding box detection.

- Training Monitoring: Ultralytics integrates with tools like MLflow and ClearML for experiment tracking and visualization. You can monitor training metrics in real time to get insights for improving your model.
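The hyperparameter and transfer-learning bullets above can be illustrated with a short sketch using the Ultralytics Python API (`YOLO(...).train(...)`), whose keyword arguments mirror the CLI's `key=value` pairs (for example, `yolo detect train data=coco8.yaml model=yolov8n.pt epochs=100 imgsz=640`). The specific values below are illustrative choices, `coco8.yaml` is Ultralytics' small sample dataset, and the helper names are hypothetical.

```python
def build_train_args(data_yaml: str, epochs: int = 100) -> dict:
    """Keyword arguments for Ultralytics YOLO.train(); each key is also a CLI key."""
    return {
        "data": data_yaml,   # dataset YAML with image paths and class names
        "epochs": epochs,
        "imgsz": 640,        # training image size in pixels
        "batch": 16,         # batch size
        "lr0": 0.01,         # initial learning rate
        "pretrained": True,  # start from the default pretrained weights
        "fliplr": 0.5,       # probability of horizontal-flip augmentation
        "mosaic": 1.0,       # probability of mosaic augmentation
    }


def to_cli(model_weights: str, args: dict) -> str:
    """Render the same configuration as a `yolo detect train` command line."""
    pairs = " ".join(f"{key}={value}" for key, value in args.items())
    return f"yolo detect train model={model_weights} {pairs}"


def train(model_weights: str, args: dict):
    """Launch training through the Python API (requires `pip install ultralytics`)."""
    from ultralytics import YOLO

    model = YOLO(model_weights)  # e.g. "yolov8n.pt" or a path to custom weights
    return model.train(**args)


print(to_cli("yolov8n.pt", build_train_args("coco8.yaml", epochs=10)))
```

Keeping the configuration in one dictionary means the Python call and the CLI command cannot drift apart; pointing `model_weights` at your own `.pt` file starts training from custom weights instead of the defaults.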

Deployment and MLOps

Lightly

Lightly is a good choice when you need a customizable pipeline, as it gives you more control, more customization options, and on-premises security.

LightlyStudio automates data preparation and speeds up data labeling. LightlyTrain exports models in their original formats, so they can be deployed with different systems and you remain free to choose your preferred deployment tools.

Both LightlyStudio and LightlyTrain come with API and Python SDK support that fits into existing MLOps stacks. You can use them to program an active learning loop: select data, send it for labeling, trigger a training job, evaluate the new model, and re-score the raw data pool.
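The five-step active learning loop described above can be sketched independently of any SDK. In this minimal sketch the scoring, annotation, and training steps are hypothetical callables that you would back with real services (for example, Lightly's data selection and a training job); none of the names are Lightly API calls.

```python
from typing import Callable

Scorer = Callable[[str], float]  # image ID -> informativeness score


def active_learning_loop(
    pool: set[str],
    scorer: Scorer,
    annotate: Callable[[list[str]], dict[str, str]],
    train_and_evaluate: Callable[[dict[str, str]], tuple[float, Scorer]],
    budget: int,
    rounds: int,
) -> tuple[dict[str, str], list[float]]:
    """Select -> label -> train -> evaluate -> re-score, repeated `rounds` times."""
    remaining = set(pool)  # copy so the caller's pool is left untouched
    labeled: dict[str, str] = {}
    history: list[float] = []
    for _ in range(rounds):
        if not remaining:
            break
        # 1. Select the highest-scoring images (ties broken by ID for determinism).
        batch = sorted(remaining, key=lambda img: (-scorer(img), img))[:budget]
        # 2. Send the batch for labeling.
        labeled.update(annotate(batch))
        remaining.difference_update(batch)
        # 3-4. Train on every label collected so far and evaluate the new model.
        metric, scorer = train_and_evaluate(labeled)
        history.append(metric)
        # 5. The new model's scorer re-scores the remaining pool next round.
    return labeled, history


# Toy stand-ins for real services (all hypothetical).
uncertainty = {"img_a": 0.9, "img_b": 0.2, "img_c": 0.7, "img_d": 0.4}


def fixed_uncertainty(img: str) -> float:
    return uncertainty[img]


def annotate(batch: list[str]) -> dict[str, str]:
    return {img: "car" for img in batch}  # pretend every image shows a car


def train_and_evaluate(labeled: dict[str, str]) -> tuple[float, Scorer]:
    # Pretend the metric grows with labeled data and uncertainty stays fixed.
    return len(labeled) / len(uncertainty), fixed_uncertainty


labeled, history = active_learning_loop(
    set(uncertainty), fixed_uncertainty, annotate, train_and_evaluate, budget=2, rounds=3
)
print(history)  # [0.5, 1.0]
```

The third round never runs because the pool is exhausted after two batches of two; in practice the loop would instead stop when the metric plateaus or the labeling budget runs out.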

Lightly supports on-premises deployments (with Docker for easy setup) as well as cloud-based workflows.

For security, Lightly offers strong access controls and suits environments with strict privacy or regulatory requirements, since it avoids unnecessary reliance on cloud services.

Ultralytics

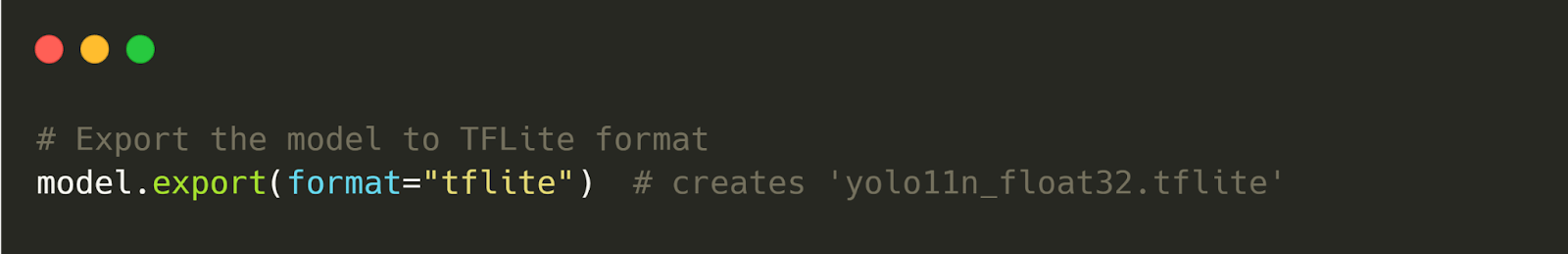

Ultralytics offers a variety of deployment options that support multiple platforms and formats.

It can export models to over 15 formats, such as ONNX, TensorRT, OpenVINO, and TFLite, for deployment across cloud, edge, and mobile devices.

For edge devices, YOLO models can be deployed through platforms like ExecuTorch and TFLite Edge TPU.

ExecuTorch provides native PyTorch deployment optimized for mobile CPUs through the XNNPACK backend, and it supports iOS, Android, and embedded Linux systems.
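For the export step, Ultralytics exposes a single `model.export(format=...)` call. The sketch below maps a few hypothetical deployment targets to format strings that Ultralytics accepts (`"onnx"`, `"engine"` for TensorRT, `"openvino"`, `"tflite"`, `"edgetpu"`, `"coreml"`); the target names and helper functions are assumptions for illustration.

```python
# Hypothetical deployment targets mapped to Ultralytics `format=` values.
EXPORT_FORMATS = {
    "cross-platform": "onnx",
    "nvidia-gpu": "engine",       # TensorRT engine
    "intel-hardware": "openvino",
    "android": "tflite",
    "coral-edge-tpu": "edgetpu",  # TFLite compiled for the Edge TPU
    "ios": "coreml",
}


def export_format(target: str) -> str:
    """Look up the export format for a deployment target."""
    try:
        return EXPORT_FORMATS[target]
    except KeyError:
        raise ValueError(
            f"unknown target {target!r}; choose one of {sorted(EXPORT_FORMATS)}"
        ) from None


def export_for(target: str, weights: str = "yolov8n.pt"):
    """Export trained weights for one target (requires `pip install ultralytics`)."""
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.export(format=export_format(target))  # exported model's path
```

Some targets need matching tooling on the export machine; a TensorRT engine, for instance, is built on an NVIDIA GPU.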

Ultralytics also provides cloud training, available through the HUB Pro and Enterprise plans. It lets you train models on cloud GPU resources with pay-per-use pricing based on GPU consumption.

Here is a summary comparison table at a glance:

Practical Implications and Use Cases

The choice between Lightly and Ultralytics goes beyond a feature comparison. It affects your team's workflow, project scalability, and overall return on investment.

Enterprise Workflow Impact

Lightly and Ultralytics can greatly shape your organization's ML workflow.

Lightly

Lightly is great for situations where you have a huge amount of data to manage.

For example, when working with petabytes of outdoor video footage, you can use Lightly to automatically curate diverse frames (such as night, rain, or rare events) and reduce labeling costs.

This way, your engineers can focus more on improving the models instead of spending all their time labeling data.

Ultralytics

On the other hand, Ultralytics provides a quicker way to get a working model up and running.

For instance, a retail security startup can upload a reasonable number of store images and have a YOLOv8 model ready to detect shoplifting or check inventory within hours.

The trade-off is that, without proper data curation, the team may end up labeling many more images upfront, even though it gains speed in the initial deployment.

Scalability and ROI (Return on Investment)

The return on investment differs between the two platforms.

Lightly's Cost Efficiency

Lightly reduces the total cost of ownership by lowering annotation labor and cutting down on unnecessary retraining, since data insights guide the process.

For instance, Aigen achieved an 80–90% reduction in dataset size by focusing on the right data, thereby doubling its deployment efficiency. That translates into considerable savings in labeling time and storage.

Ultralytics' Speed to Value

In contrast, Ultralytics offers ROI in terms of speed and accessibility with its low barrier to entry.

It enables teams with little ML expertise to quickly create a functional model that can be good enough to start generating business value, like automating a task. This can be done without upfront investment in ML operations infrastructure.

Choosing the Right Tool for the Job

The choice between Lightly and Ultralytics may come down to the nature of your project. Here are some points to consider when selecting the best option for your use case.

When to Use Lightly:

- Your primary challenge is the cost of data collection and labeling.

- You are dealing with large, raw, unlabeled, or highly redundant datasets, like video feeds.

- You operate in a domain-specific field such as medical, agriculture, automotive, or autonomous driving, where generic models perform poorly.

- You have high-security or data-privacy requirements and must deploy on-premises, with no data leaving your infrastructure.

- Your goal is to build a best-in-class, continuously improving model with the best long-term performance and lowest total cost of ownership (TCO).

When to Use Ultralytics:

- Your bottleneck is model development speed and deployment.

- You have a well-defined, modest-sized, and already labeled dataset.

- Your task is a common one (detecting people, cars, or common objects) where a fine-tuned YOLO model is sufficient.

- You need to ship a proof-of-concept or MVP (minimum viable product) fast to prove business value.

- Your goal is speed-to-value, and you require flexible deployment options (cloud API, mobile, edge).

However, many ML teams use both tools together to maximize performance and efficiency. For example, you can start with LightlyStudio to analyze the entire raw data pool and extract a small set of high-value images.

Next, use LightlyTrain to pretrain the YOLOv8 student model (or any YOLO model) by distilling knowledge from DINOv3, creating a custom, domain-specific base model with a 14% mAP head start.

Once you have a strong, customized base model, fine-tune it with Ultralytics on your curated labeled set (selected in LightlyStudio).

Finally, use Ultralytics' export functions to convert your trained model to any supported format for edge, mobile, or cloud inference.
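The combined workflow above can be sketched in a few lines, assuming LightlyTrain's `lightly_train.train(..., method="distillation")` entry point and its behavior of writing an exported checkpoint to `exported_models/exported_last.pt` under the output directory, which Ultralytics' `YOLO()` can then load. The directory and dataset names are hypothetical, and the teacher model is left at LightlyTrain's default; selecting DINOv3 explicitly is done through the method's arguments, so check the LightlyTrain docs for the exact option names.

```python
from pathlib import Path


def exported_weights_path(out_dir: str) -> Path:
    """Where LightlyTrain is assumed to write the exported model after training."""
    return Path(out_dir) / "exported_models" / "exported_last.pt"


def pretrain_with_distillation(data_dir: str, out_dir: str) -> Path:
    """Distill a foundation-model teacher into a YOLOv8s student."""
    import lightly_train  # pip install lightly-train

    lightly_train.train(
        out=out_dir,
        data=data_dir,                     # folder of curated, unlabeled images
        model="ultralytics/yolov8s.yaml",  # student architecture
        method="distillation",
    )
    return exported_weights_path(out_dir)


def finetune_and_export(base_weights: Path, data_yaml: str, export_format: str = "onnx"):
    """Fine-tune on the curated labeled subset, then export for deployment."""
    from ultralytics import YOLO  # pip install ultralytics

    model = YOLO(str(base_weights))
    model.train(data=data_yaml, epochs=100)
    return model.export(format=export_format)
```

A script would call `weights = pretrain_with_distillation("curated_images/", "out/distill_yolov8s")` and then `finetune_and_export(weights, "curated_labeled.yaml")` inside an `if __name__ == "__main__":` guard; the curation step in LightlyStudio happens beforehand and decides what goes into those two datasets.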

💡 Pro Tip: To decide which data workflow tools best support your data quality goals and integrate well with curated datasets, consult Best Data Curation Tools for recommended systems and practices.

Final Thought

Choosing between Lightly and Ultralytics means comparing different AI approaches.

Ultralytics is all about the model architectures and makes them fast and simple to use. Lightly focuses on the data used to train the models, built on the principle that better data leads to better models.

Both model architecture and data quality are critical components for success in production AI.

As AI models become more common, the key to staying competitive in the long run is the quality and quantity of the training data, not the model itself.

Lightly's scalable, secure, data-first system is built for complex, petabyte-scale challenges, giving companies a lasting edge rooted in their data.
