PyTorch vs. TensorFlow: Which Framework to Choose

Choosing between PyTorch and TensorFlow depends on your goals. PyTorch is favored in research and prototyping thanks to its intuitive syntax and dynamic computation graphs. TensorFlow stands out in production with strong deployment tools and mobile support. PyTorch is easier for beginners, while TensorFlow offers scalability for complex systems. Both remain relevant in 2025, serving different developer needs.

Is PyTorch better than TensorFlow?

Not universally. It depends on the use case.

- PyTorch excels in research and prototyping thanks to its Pythonic syntax and dynamic graph.

- TensorFlow is production-ready with strong deployment, mobile support, and visualization tools like TensorBoard.

Which is easier to learn: PyTorch or TensorFlow?

- PyTorch is often praised for its simplicity and ease of debugging, which makes it a better fit for beginners.

- TensorFlow has a steeper learning curve, especially for those unfamiliar with static graphs or graph execution models.

Is PyTorch worth learning in 2025?

Yes. It’s widely used in academia and research, and increasingly in industry, including at companies like OpenAI and Meta.

Is TensorFlow worth learning in 2025?

Absolutely, especially if you're targeting enterprise-grade, large-scale, or mobile deployment systems. TensorFlow’s ecosystem is vast.

Does OpenAI use PyTorch or TensorFlow?

In January 2020, OpenAI announced that it was standardizing on PyTorch.

Is TensorFlow still relevant?

Yes. Despite PyTorch's surge, TensorFlow remains a dominant choice for production pipelines, mobile deployment (via TensorFlow Lite), and scalable architectures.

Can you switch between PyTorch and TensorFlow easily?

Switching requires some re-learning due to differences in architecture, especially in how they define and execute computation graphs.

Choosing the right deep learning framework is one of the first and most important decisions a machine learning engineer or researcher will make. PyTorch (from Meta) and TensorFlow (from Google) are the two dominant and most widely adopted deep learning frameworks.

Whether you're building experimental models or scaling to production, understanding the strengths and trade-offs of PyTorch vs. TensorFlow can help you choose the right tool for the job.

In this post, we will discuss everything from the architecture and training performance to deployment and ease of use.

- What is PyTorch & TensorFlow: Key Features

- PyTorch vs. TensorFlow: A comparison

- Use Cases and Industry Applications

- How to Choose the Right Framework: Checklist

You can take a quick glance at key differences between PyTorch and TensorFlow below.

If you also want to learn about computer vision tools that complement your PyTorch or TensorFlow workflow, check out LightlyOne and LightlyTrain.

- LightlyOne helps you select the most valuable images from your dataset, reducing labeling costs without sacrificing performance.

- LightlyTrain enables self-supervised pretraining on unlabeled data, giving your models a head start before fine-tuning.

Whether you’re iterating in notebooks with PyTorch or building scalable systems with TensorFlow, Lightly tools can accelerate your model development.

What is PyTorch?

PyTorch was developed by Facebook’s AI Research lab (FAIR) and released as an open-source project in 2016. It quickly became the go-to framework in the research community thanks to its flexibility, Pythonic design, and dynamic computation graph.

Key Features

- Intuitive Syntax: Easy to learn for Python/NumPy users.

- Strong Research Ecosystem: Widely used in academic papers and experimental models.

- Flexible: Enables rapid prototyping and custom logic.

- Dynamic Computation Graph (Define-by-Run): Makes model building and debugging intuitive.

What is TensorFlow?

TensorFlow was developed by Google Brain and open-sourced in 2015. Initially built with production scalability in mind, TensorFlow was adopted quickly by enterprises building large-scale ML systems.

Key Features

- TensorFlow Serving, Lite, and JS: Streamlined deployment across server, mobile, and browser.

- Keras Integration: Simplifies model creation while retaining access to low-level APIs.

- Cross-Platform & Language Support: Well-supported in Python, C++, Java, and JavaScript.

- XLA Compiler & TPU Support: Designed for high-performance training and inference.

PyTorch vs. TensorFlow: A Comparison

Both PyTorch and TensorFlow can be used to train machine learning models, but they differ in architecture, usability, and deployment capabilities.

Let’s look at the key areas.

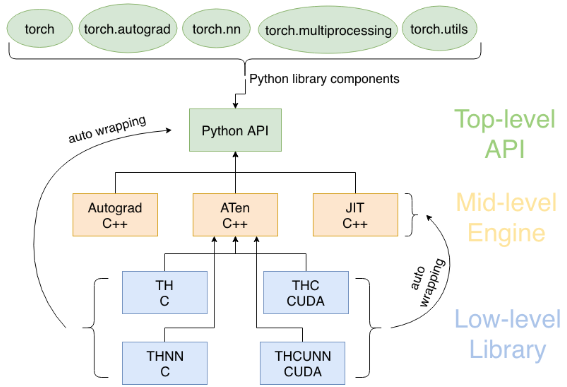

Architecture

PyTorch is built around a dynamic computational graph model, often referred to as define-by-run: the computation graph is created on the fly as operations are executed. Because the model's structure is defined at runtime, debugging and experimentation are extremely intuitive, especially for researchers and those familiar with Python.

Core components like autograd for automatic differentiation and nn.Module for model organization make PyTorch feel like native Python.

Because of its dynamic nature, PyTorch is favored in use cases that demand flexibility, such as NLP, reinforcement learning, or models with variable input/output shapes.
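As a minimal sketch of define-by-run (assuming PyTorch is installed; the class name DynamicDepthNet is hypothetical), the model below uses an ordinary Python loop whose length is chosen at call time, and autograd differentiates whichever graph was recorded during that particular call:

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical toy model whose depth is chosen at call time."""

    def __init__(self, dim: int = 8):
        super().__init__()
        self.layer = nn.Linear(dim, dim)
        self.head = nn.Linear(dim, 1)

    def forward(self, x: torch.Tensor, steps: int) -> torch.Tensor:
        # Plain Python control flow: the graph is recorded as these ops run,
        # so every call may produce a graph of a different size.
        for _ in range(steps):
            x = torch.relu(self.layer(x))
        return self.head(x)


torch.manual_seed(0)
model = DynamicDepthNet()
x = torch.randn(4, 8)

for steps in (1, 3):
    out = model(x, steps=steps)
    loss = out.pow(2).mean()
    model.zero_grad()
    loss.backward()  # autograd traverses the graph recorded during this call
    grad_norm = model.layer.weight.grad.norm().item()
    print(f"steps={steps} output={tuple(out.shape)} grad_norm={grad_norm:.4f}")
```

No compilation step is involved: changing steps simply changes which operations get recorded.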

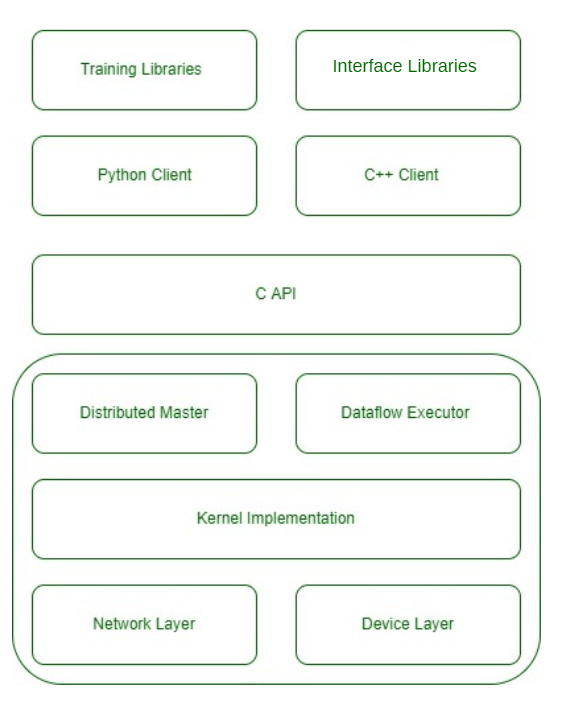

TensorFlow, in contrast, was originally designed around a static computation graph model, often described as define-then-run: the entire computation graph is built first and then executed. This enabled compiler optimizations and better performance in production, but it also made model development more complex. Since TensorFlow 2.0, eager execution is the default, and graphs are built on demand by wrapping Python functions in tf.function.
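A minimal sketch of the two execution styles in TensorFlow 2.x (assuming TensorFlow 2.x is installed; dense_relu is a hypothetical name): an op executed eagerly, next to a function that tf.function traces once into a graph and then reuses.

```python
import tensorflow as tf

x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
w = tf.Variable(tf.ones((2, 2)))

# Eager execution (the TF 2.x default): the op runs immediately, like NumPy.
print(tf.executing_eagerly(), tf.matmul(x, w).numpy())


# tf.function traces the Python body into a static graph (define-then-run)
# that TensorFlow can optimize and reuse on later calls.
@tf.function
def dense_relu(inputs):
    return tf.nn.relu(tf.matmul(inputs, w))


y = dense_relu(x)  # first call: trace the graph, then run it
y = dense_relu(x)  # same input signature: reuse the traced graph

# A concrete function exposes the underlying graph for inspection.
concrete = dense_relu.get_concrete_function(
    tf.TensorSpec(shape=(None, 2), dtype=tf.float32)
)
print(y.numpy(), len(concrete.graph.get_operations()), "ops in the traced graph")
```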

TensorFlow includes a broad ecosystem of tools that reinforce its architectural design. tf.data handles efficient data pipelines, while tf.Tensor provides the base numerical type for computation. When it comes to performance, the XLA (Accelerated Linear Algebra) compiler enables further optimizations by transforming computation graphs into highly efficient executable code, particularly useful for TPUs and GPUs.
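A minimal sketch of these pieces working together, assuming TensorFlow 2.x and random stand-in data: a tf.data pipeline that shuffles, batches, and prefetches, feeding a function that is compiled with XLA via tf.function(jit_compile=True). Whether XLA actually speeds things up depends on the model and hardware.

```python
import tensorflow as tf

# Hypothetical in-memory data standing in for a real dataset.
features = tf.random.normal((1000, 16))
labels = tf.random.uniform((1000,), maxval=2, dtype=tf.int32)

# tf.data: a declarative input pipeline that shuffles, batches, and prefetches
# so the accelerator is not left waiting on the host.
dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .shuffle(buffer_size=1000)
    .batch(64)
    .prefetch(tf.data.AUTOTUNE)
)

w = tf.Variable(tf.random.normal((16, 2)))


# jit_compile=True asks TensorFlow to compile this function with XLA.
@tf.function(jit_compile=True)
def logits_fn(x):
    return tf.matmul(x, w)


for batch_x, batch_y in dataset.take(1):
    print(batch_x.shape, logits_fn(batch_x).shape)
```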

This architecture makes TensorFlow particularly well-suited for production-grade systems, large-scale deployments, and applications that demand cross-platform support.

Ease of Use

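PyTorch is known for its clean, Pythonic syntax, which also makes debugging straightforward: models run as ordinary Python code, so standard tools such as print statements and the Python debugger (pdb) work as expected.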

TensorFlow has improved significantly with the integration of Keras, whose high-level APIs make it much less complex than earlier versions. However, working with low-level components or custom training loops can still feel rigid.
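For comparison with Keras's compile()/fit(), a custom training loop in TensorFlow is usually written with tf.GradientTape. A minimal sketch, assuming TensorFlow 2.x and a hypothetical toy linear-regression task (y = 3x + 2 plus noise):

```python
import tensorflow as tf

tf.random.set_seed(0)

# Hypothetical toy data: y = 3x + 2 plus a little noise.
x = tf.random.normal((256, 1))
y = 3.0 * x + 2.0 + 0.1 * tf.random.normal((256, 1))

dense = tf.keras.layers.Dense(1)
model = tf.keras.Sequential([tf.keras.Input(shape=(1,)), dense])
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)
loss_fn = tf.keras.losses.MeanSquaredError()


@tf.function  # optional: compile the step into a graph
def train_step(xb, yb):
    # The tape records the forward pass so gradients can be computed.
    with tf.GradientTape() as tape:
        loss = loss_fn(yb, model(xb, training=True))
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return loss


for epoch in range(100):
    loss = train_step(x, y)  # full-batch gradient descent step

kernel, bias = dense.get_weights()
print(float(loss), kernel.ravel(), bias)
```

Every step of the loop is explicit here, which is more flexible than fit() but also more code to maintain.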

PyTorch is often preferred by beginners and researchers, while TensorFlow suits those looking to scale quickly with structured APIs.

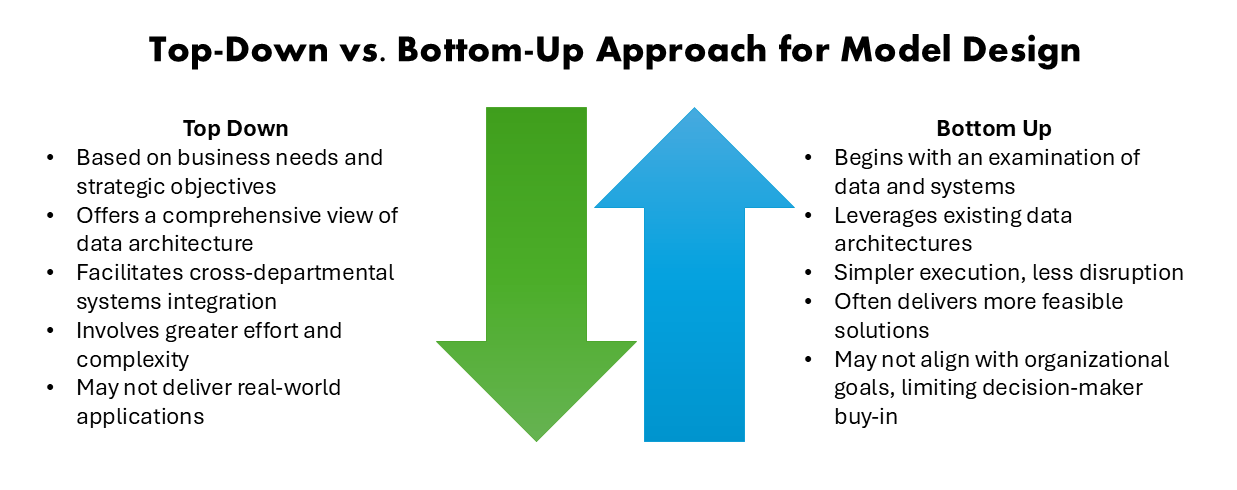

Model Building

The PyTorch framework uses a bottom-up approach: you build models from raw building blocks, which allows greater flexibility and easier debugging.
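A minimal sketch of the bottom-up style, assuming PyTorch is installed (SmallCNN and the dummy data are hypothetical): layers are declared in __init__, wired together explicitly in forward, and trained with a hand-written step.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SmallCNN(nn.Module):
    """Hypothetical image classifier assembled from raw building blocks."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.fc = nn.Linear(32, num_classes)

    def forward(self, x):
        # Every step is explicit, so prints or breakpoints can go anywhere.
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.relu(self.conv2(x))
        x = x.mean(dim=(2, 3))  # global average pooling -> (batch, 32)
        return self.fc(x)


torch.manual_seed(0)
model = SmallCNN()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
images = torch.randn(8, 3, 32, 32)  # dummy batch, channels-first
targets = torch.randint(0, 10, (8,))

# One explicit training step: forward, loss, backward, update.
logits = model(images)
loss = F.cross_entropy(logits, targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(logits.shape, loss.item())
```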

The TensorFlow framework, with Keras, uses a top-down approach that lets developers quickly stack layers and run experiments using predefined modules.
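The same hypothetical classifier in the top-down Keras style, as a minimal sketch assuming TensorFlow 2.x and dummy data. Note that Keras defaults to channels-last images (batch, height, width, channels), whereas PyTorch's Conv2d expects channels-first (batch, channels, height, width).

```python
import numpy as np
import tensorflow as tf

# A small classifier stacked from predefined Keras layers.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(10),
])

# compile() + fit() replace the hand-written training loop.
model.compile(
    optimizer="sgd",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

images = np.random.rand(8, 32, 32, 3).astype("float32")  # dummy batch, channels-last
targets = np.random.randint(0, 10, size=(8,))
history = model.fit(images, targets, epochs=1, verbose=0)
print(model.output_shape, history.history["loss"])
```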

Many developers find the PyTorch approach more natural, but for practical model-building pipelines, TensorFlow’s high-level APIs shine.

Training Performance & Speed

PyTorch often leads in training speed. Benchmarks indicate that PyTorch completes training tasks more quickly than TensorFlow, especially when using CUDA for GPU acceleration; this is attributed to its more efficient use of GPU resources and its streamlined execution path. Results vary with the model, hardware, and configuration, so it is worth benchmarking your own workload.
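Using CUDA from PyTorch mostly comes down to placing the model and tensors on the right device. A minimal, device-agnostic sketch (assuming PyTorch; the layer sizes are arbitrary) that also runs on a CPU-only machine:

```python
import torch
import torch.nn as nn

# Pick the GPU when CUDA is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Sequential(nn.Linear(512, 256), nn.ReLU(), nn.Linear(256, 10)).to(device)
batch = torch.randn(64, 512, device=device)  # allocate the input directly on the device

with torch.no_grad():  # inference only: skip recording the autograd graph
    out = model(batch)

print(device, out.device, out.shape)
```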

TensorFlow may train slightly slower in certain scenarios, but its architecture allows for extensive graph-level optimizations, which lead to efficient execution of complex models. TensorFlow’s support for TPUs and distributed training also makes it a stronger choice for enterprise-level applications that need scalability and performance.
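On the TensorFlow side, distributed training is typically set up through tf.distribute. A minimal sketch using MirroredStrategy for synchronous training across the GPUs of a single machine, assuming TensorFlow 2.x and dummy data (TPUStrategy plays the analogous role on TPUs):

```python
import numpy as np
import tensorflow as tf

# MirroredStrategy replicates the model on every visible GPU of one machine;
# with no GPU present it falls back to a single CPU replica.
strategy = tf.distribute.MirroredStrategy()
print("replicas:", strategy.num_replicas_in_sync)

# Variables must be created inside the strategy scope so they are mirrored.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(20,)),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

x = np.random.rand(256, 20).astype("float32")  # dummy data
y = np.random.rand(256, 1).astype("float32")

# fit() splits each global batch across the replicas automatically.
history = model.fit(x, y, batch_size=64, epochs=1, verbose=0)
```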

In terms of memory usage, TensorFlow tends to be more efficient and to use less RAM during training than PyTorch, which helps when working with large datasets. However, PyTorch’s higher memory consumption is often offset by its faster training times and ease of use.

Production Deployment and Model Serving

When it comes to production deployment, TensorFlow has a clear edge thanks to its ecosystem. TensorFlow Serving offers a scalable solution for deploying models via REST or gRPC APIs, making it easy to integrate with existing production systems. TensorFlow Lite optimizes models for mobile and edge devices. Tools like these make TensorFlow a go-to choice for production-grade machine learning.
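A minimal sketch of both paths, assuming a recent TensorFlow 2.x release in which Keras models provide model.export() (the tiny model and the paths are hypothetical): export a SavedModel into a numbered version directory, the layout TensorFlow Serving loads, then convert it to TensorFlow Lite and run it with the TFLite interpreter.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical tiny model standing in for a real one.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(2),
])

# 1) SavedModel in a numbered version directory (<base_path>/1),
#    the layout TensorFlow Serving watches for.
base_path = os.path.join(tempfile.mkdtemp(), "my_model")
export_path = os.path.join(base_path, "1")
model.export(export_path)

# 2) Convert the SavedModel to TensorFlow Lite for mobile/edge targets.
converter = tf.lite.TFLiteConverter.from_saved_model(export_path)
converter.optimizations = [tf.lite.Optimize.DEFAULT]  # default post-training optimizations
tflite_bytes = converter.convert()

# 3) Run the converted model with the TFLite interpreter.
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_info = interpreter.get_input_details()[0]
output_info = interpreter.get_output_details()[0]
interpreter.set_tensor(input_info["index"], np.random.rand(1, 4).astype("float32"))
interpreter.invoke()
result = interpreter.get_tensor(output_info["index"])
print(len(tflite_bytes), "bytes; output shape", result.shape)
```

Once a TensorFlow Serving server is pointed at the base directory, clients can POST JSON such as {"instances": [[0.1, 0.2, 0.3, 0.4]]} to /v1/models/<model_name>:predict on the REST port (8501 in the official Docker image).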

Although PyTorch has traditionally been preferred in research, it has made significant strides in production. Tools like TorchServe provide a native serving solution with support for REST APIs, model versioning, and monitoring. PyTorch also supports cross-platform deployment through ONNX export. Even if its ecosystem is not as comprehensive as TensorFlow’s, it is still a viable option for real-world computer vision applications.

Your choice may ultimately depend on whether flexibility or end-to-end tooling is more important for your use case.

Pro tip: Check out Top Computer Vision Tools for ML Engineers in 2025.

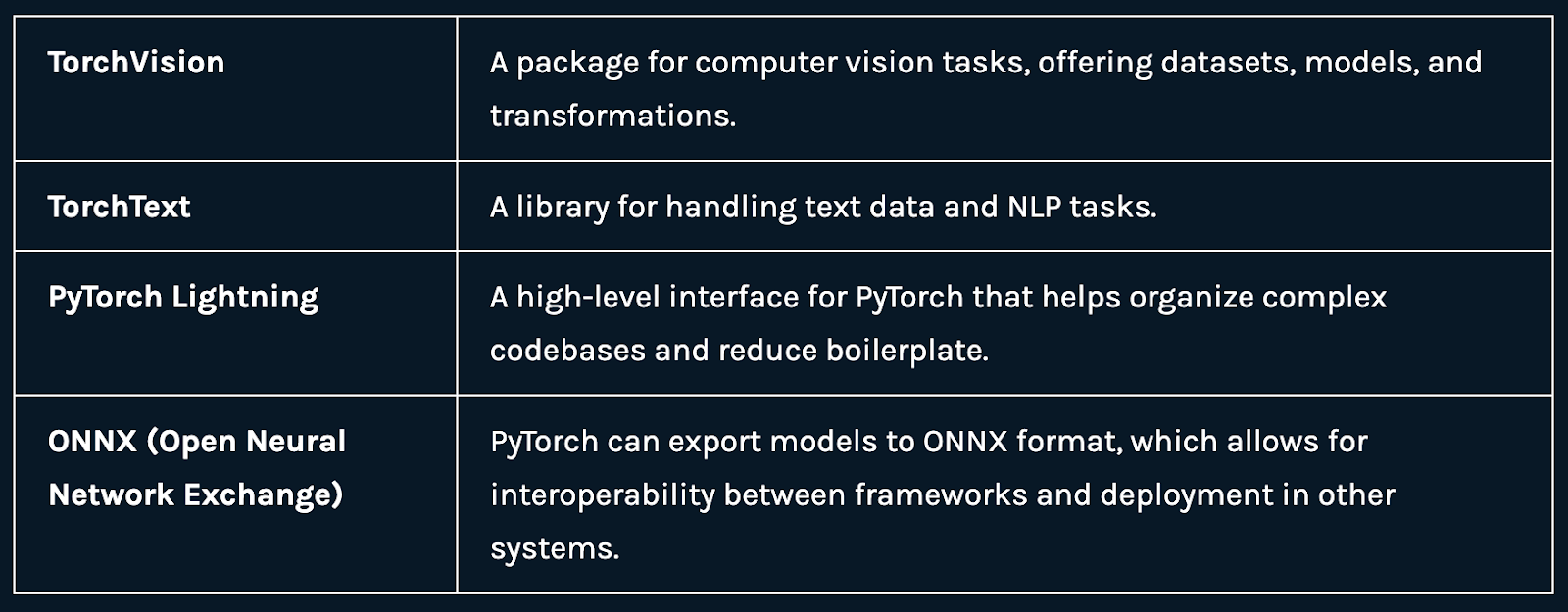

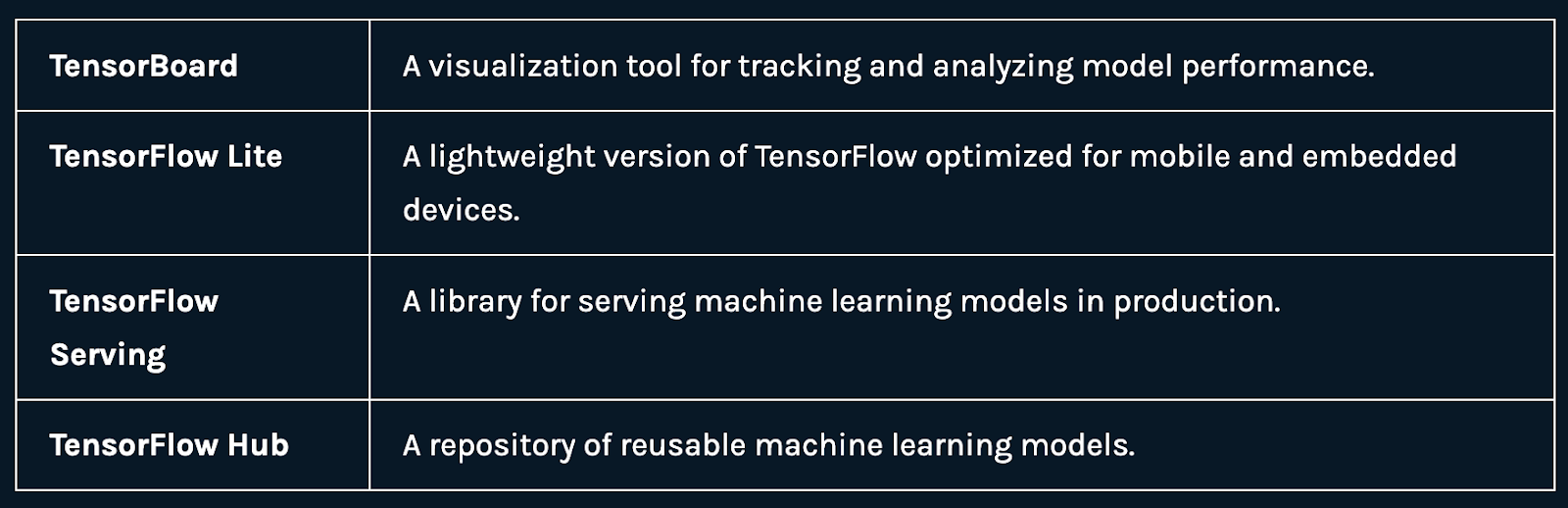

Ecosystem & Tooling Support

TensorFlow provides an end-to-end ecosystem with tools like TensorBoard for visualization, TensorFlow Lite for mobile deployment and TFX for production pipelines. Its tight integration with Google Cloud and the TPU support makes it a strong choice for enterprise-scale applications.
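A minimal sketch of TensorBoard logging from Keras, assuming TensorFlow 2.x and dummy data (the log directory is a temporary, hypothetical location): the TensorBoard callback records training curves during fit(), and tf.summary writes custom scalars.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

log_dir = tempfile.mkdtemp()  # hypothetical log location

model = tf.keras.Sequential([tf.keras.Input(shape=(10,)), tf.keras.layers.Dense(1)])
model.compile(optimizer="adam", loss="mse")

x = np.random.rand(128, 10).astype("float32")  # dummy data
y = np.random.rand(128, 1).astype("float32")

# The TensorBoard callback writes per-epoch loss curves under log_dir.
model.fit(x, y, epochs=3, verbose=0,
          callbacks=[tf.keras.callbacks.TensorBoard(log_dir=log_dir)])

# Arbitrary scalars can also be logged directly with tf.summary.
writer = tf.summary.create_file_writer(os.path.join(log_dir, "custom"))
with writer.as_default():
    for step in range(3):
        tf.summary.scalar("my_metric", 0.5 * step, step=step)
writer.flush()

print(sorted(os.listdir(log_dir)))
```

Running tensorboard --logdir pointed at the same directory then serves the dashboards locally.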

PyTorch offers more flexibility, and its ecosystem is growing. Libraries like TorchVision and PyTorch Lightning simplify model development, and TorchServe handles deployment. Though less unified than TensorFlow’s ecosystem, PyTorch’s modular tools are well suited for research and experimentation.

Community, Documentation, and Learning Resources

TensorFlow has a large, established community with extensive official documentation, tutorials, and courses. Its strong industry backing makes it easy to find production-grade examples and support.

PyTorch is favored in academia and by researchers for its intuitive design and active community. Frequent releases, open-source models, and comprehensive tutorials make it ideal for rapid prototyping.

Use Cases & Industry Adoption

Both PyTorch and TensorFlow are general-purpose deep learning frameworks. However, they tend to dominate in different contexts.

💡 Pro Tip: If you are comparing deep learning frameworks for multimodal vision tasks, our OpenAI CLIP Model Explained article shows how CLIP’s PyTorch-centric ecosystem influences zero-shot workflows and deployment choices.

Here’s how they compare across specific domains:

- Computer Vision (CV): PyTorch is the backbone of models like Stable Diffusion and CLIP, and the torchvision library provides essential tools for image processing tasks. TensorFlow is widely used to deploy models on mobile and edge devices and in browser-based applications.

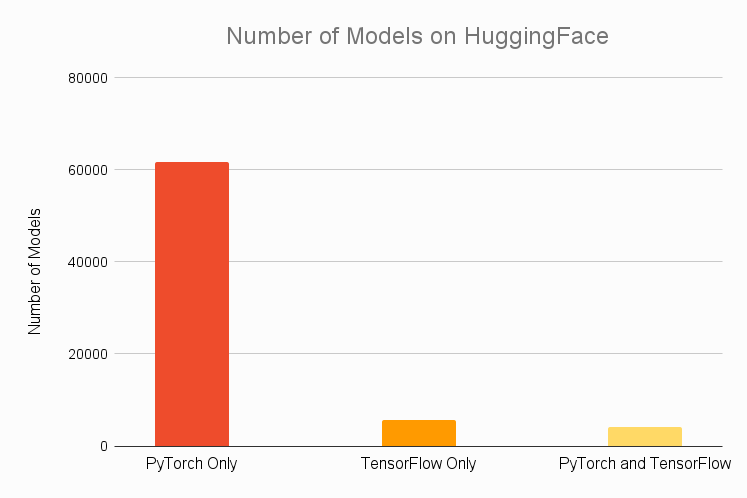

- Natural Language Processing Tasks (NLP): PyTorch dominates NLP research, with Hugging Face's Transformers library primarily built on it. It's the framework of choice for training large language models like LLaMA and GPT variants. TensorFlow is also prevalent in industry applications, particularly where integration with existing TensorFlow-based pipelines is beneficial.

- Large-Scale Models: TensorFlow is a go-to framework for training and deploying large-scale deep learning models, particularly on TPUs, and its tools for scalable inference are widely used. PyTorch’s support for distributed training also makes it a strong choice.

- Reinforcement Learning and Others: PyTorch is favored in reinforcement learning research due to its simplicity and flexibility. Libraries like Stable-Baselines3 are built on PyTorch, facilitating RL experimentation. TensorFlow’s use in this area has declined as practitioners have shifted to more flexible alternatives.

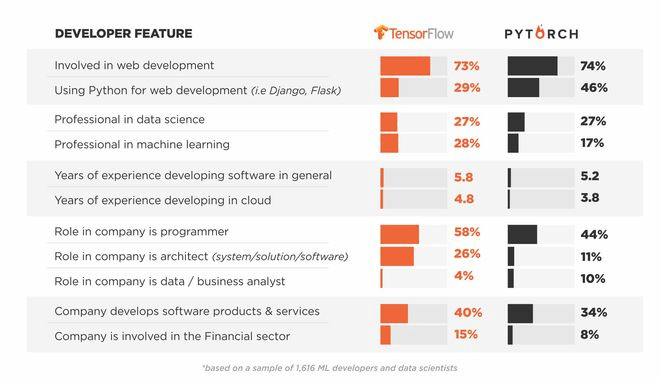

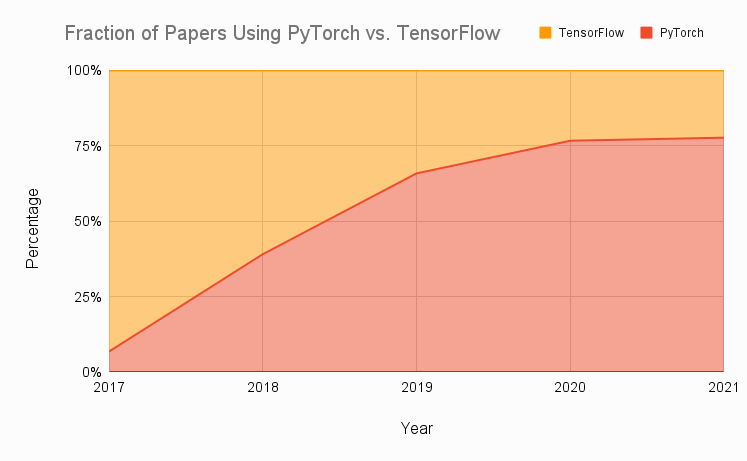

- Academic vs Industrial Projects: PyTorch is the preferred framework in academic research, with a significant majority of new deep learning projects and research papers utilizing it. For industrial projects, TensorFlow remains a staple in production environments, especially within large enterprises that require robust deployment and scalability features.
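For the large-scale models point above, PyTorch's main data-parallel tool is DistributedDataParallel (DDP). A minimal sketch, assuming PyTorch with the CPU-friendly gloo backend and hypothetical data: started with plain python it runs a single process, and under torchrun each launched process joins the same group.

```python
import os

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


def main() -> float:
    # torchrun sets these for every process; the defaults allow a
    # single-process run when the script is started with plain `python`.
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")
    rank = int(os.environ.get("RANK", "0"))
    world_size = int(os.environ.get("WORLD_SIZE", "1"))

    # "gloo" runs on CPU; "nccl" is the usual choice for multi-GPU training.
    dist.init_process_group("gloo", rank=rank, world_size=world_size)

    torch.manual_seed(rank)
    model = DDP(nn.Linear(10, 1))  # replicas stay in sync via gradient all-reduce
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

    # Hypothetical per-process shard of data.
    x, y = torch.randn(32, 10), torch.randn(32, 1)
    loss = nn.functional.mse_loss(model(x), y)
    optimizer.zero_grad()
    loss.backward()  # gradients are averaged across all processes here
    optimizer.step()

    dist.destroy_process_group()
    return loss.item()


final_loss = main()
print(f"final loss: {final_loss:.4f}")
```

For example, torchrun --nproc_per_node=2 train_ddp.py (train_ddp.py being a hypothetical file name) would launch two processes, with torchrun setting RANK, WORLD_SIZE, MASTER_ADDR, and MASTER_PORT for each.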

Which One Should I Choose?

Choose PyTorch if you prioritize flexibility, rapid prototyping, and research-oriented projects. It is ideal for experimenting with custom architectures and for projects that require frequent model changes, and it gives you fine-grained control over the training process.

Opt for TensorFlow if you need a mature production-ready framework with strong support for deployment at scale. Its ecosystem is well-suited for applications where stability, scalability, and integration with existing infrastructure are critical.

Ultimately, both frameworks continue to evolve. Consider your team’s expertise, project goals, and deployment needs when deciding. For many developers, a practical approach is to develop in PyTorch and then export the model to ONNX for production, where it can be served by ONNX-compatible runtimes or converted for other environments.

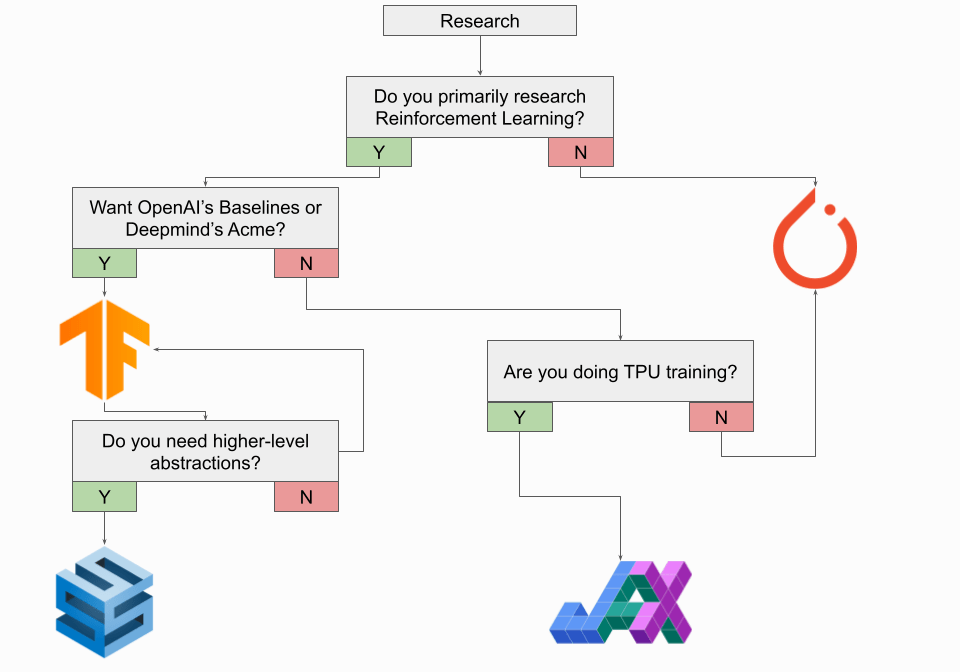

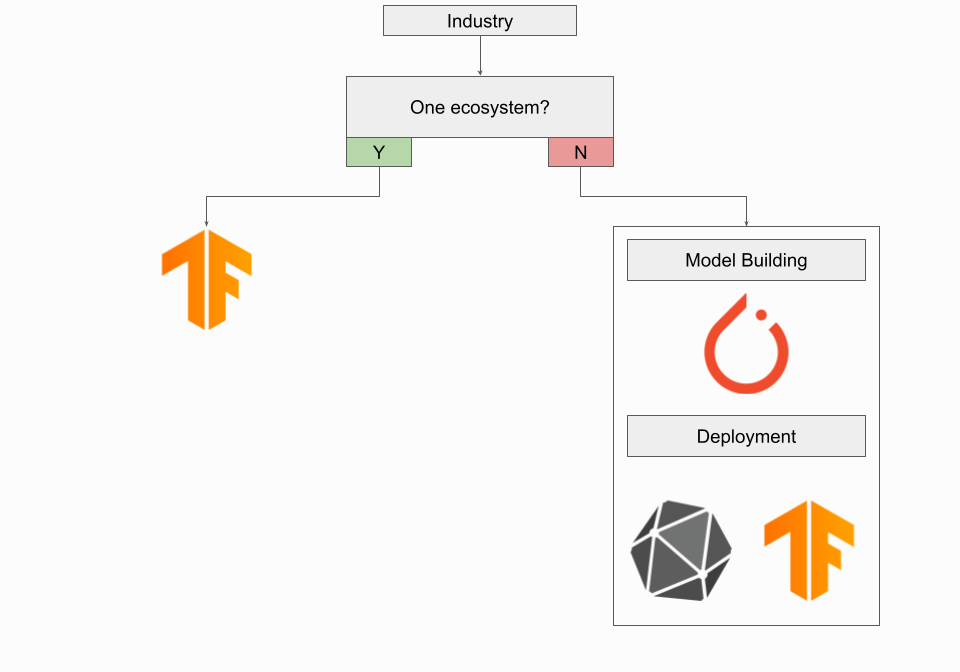

Finally, here’s a quick cheatsheet for deciding which framework would fit you best.

- PyTorch is ideal for research, experimentation, and custom model building.

- TensorFlow is better for production, scalability, and cross-platform deployment.

- PyTorch offers easier debugging and a more intuitive, Pythonic API.

- TensorFlow provides stronger tools for mobile, web, and enterprise use cases.

- Choose PyTorch for flexibility and TensorFlow for deployment, or use both strategically.

Discuss this Post

If you have any questions about this blog post, start a discussion on Lightly's Discord.
